9 Chunking Strategies to Improve RAG Performance

NBD Lite #52: How you split your data can affect RAG performance

All the code used here is present in the RAG-To-Know repository.

Retrieval-augmented generation (RAG) combines data retrieval with LLM-based generation, producing output grounded in the context of its retrieved chunks.

In our previous article, we discussed how to evaluate our RAG system to make it more trustworthy. However, we can still improve its performance by managing the chunking strategy.

Explainable RAG for More Trustworthy System

When we talk about chunks, we refer to segments of text from a larger document or dataset, split according to certain rules and strategies, that will be indexed in the RAG knowledge base. For example, a simple split below results in two different chunks.

A chunk serves as a manageable unit for embedding, indexing, and retrieval. As the retrieval unit, it is what the system matches against a query to find relevant context. Rather than passing all the documents into the generative model, passing only the most pertinent passages is much more efficient.

Many chunking strategies are available, each offering advantages and disadvantages.

In this article, we will explore nine different chunking strategies and how to implement them. Here is an infographic summarizing our discussion.

Curious about it? Let’s get into it!

Introduction to Chunking

As mentioned above, chunking is breaking down large documents into manageable units. We try to extract and restructure the document into multiple parts that the RAG system will retrieve according to the query input.

Remember, RAG functions by embedding a query during inference and retrieving the top-k most relevant chunks from the database. These chunks serve as context for the generative model to generate a grounded and pertinent response.
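To make the top-k retrieval step concrete, here is a minimal sketch in plain Python. It assumes a toy word-count vector as a stand-in for a real embedding model, and the names `bow_vector`, `cosine`, and `retrieve_top_k` are hypothetical helpers for illustration only.

```python
import math
from collections import Counter

def bow_vector(text):
    """Toy 'embedding': lowercase word counts (a stand-in for a real embedding model)."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[w] * b[w] for w in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

def retrieve_top_k(query, chunks, k=2):
    """Return the k chunks most similar to the query, best match first."""
    query_vec = bow_vector(query)
    scored = [(cosine(query_vec, bow_vector(c)), c) for c in chunks]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [c for _, c in scored[:k]]

toy_chunks = [
    "chunking splits documents into smaller units",
    "the weather is sunny today",
    "retrieval finds relevant chunks for a query",
]
print(retrieve_top_k("how does retrieval find relevant chunks", toy_chunks, k=1))
```

In a real system the word counts would be replaced by dense embeddings and the linear scan by a vector index, but the ranking idea stays the same.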

Chunks can range from sentences to paragraphs or sections, depending on the use case and the splitting rule. These rules are what we call the chunking strategy.

There are many strategies for performing chunking, each with advantages and disadvantages. Let’s explore each strategy.

1. Fixed-Size Chunking

Fixed-size chunking is a strategy for dividing text into segments of uniform length, such as by word count, token count, or character count. For example, the document might be split into chunks of 200 words each.

This method relies on straightforward text division, making it easy to implement. It is often used when consistent input size is critical, such as feeding data into machine learning models with fixed input dimensions.
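The same idea works with other units. As a small sketch, here is a character-count variant; the function name `fixed_size_chunk_chars` is a hypothetical helper, not part of any library.

```python
def fixed_size_chunk_chars(text, chunk_size=1000):
    """Split text into consecutive pieces of at most chunk_size characters."""
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    return [text[i:i + chunk_size] for i in range(0, len(text), chunk_size)]

sample = "abcdefghij"
print(fixed_size_chunk_chars(sample, chunk_size=4))  # ['abcd', 'efgh', 'ij']
```

Character-based splitting is the most predictable in size, but it can cut words in half, which is worth keeping in mind when choosing the unit.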

Advantages:

Simplicity and Speed: Requires minimal computational resources or linguistic analysis, allowing fast implementation.

Predictability: Produces uniform chunk sizes, simplifying storage, indexing, and retrieval in databases.

Disadvantages:

Context Fragmentation: Often splits sentences or paragraphs mid-thought, which disrupts semantic meaning.

Rigidity: Fails to adapt to the natural flow of text, potentially isolating related concepts into separate chunks.

We can easily implement the Fixed-Size chunking strategy with the following code.

def fixed_size_chunk(text, chunk_size=500):
    # Split on whitespace and group every chunk_size words into one chunk
    words = text.split()
    return [" ".join(words[i:i+chunk_size]) for i in range(0, len(words), chunk_size)]

chunks = fixed_size_chunk(pdf_text, chunk_size=500)

2. Sentence-Based Chunking

Sentence-based chunking is a strategy for dividing text based on sentence boundaries. We can rely on simple rules, such as splitting on periods, or use NLP libraries such as spaCy or NLTK to identify the sentence boundaries.

This approach respects grammatical and contextual units, making it suitable for tasks like translation or sentiment analysis where sentence integrity matters.
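For the simple rule-based option, a rough sketch with a regular expression could look like this. The helper name `simple_sentence_split` is hypothetical, and the rule is only a heuristic: it splits after `.`, `!`, or `?` when followed by whitespace.

```python
import re

def simple_sentence_split(text):
    """Split after '.', '!' or '?' when followed by whitespace (a rough heuristic)."""
    parts = re.split(r"(?<=[.!?])\s+", text.strip())
    return [p for p in parts if p]

print(simple_sentence_split("RAG retrieves context. It then generates an answer! Does it work?"))
```

A rule like this breaks on abbreviations such as "Dr. Smith", which is one reason to prefer a proper sentence tokenizer for real documents.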

Advantages:

Semantic Preservation: Maintains full sentences, reducing the risk of meaning loss.

Natural Boundaries: Aligns with human-readable structures, improving interpretability.

Disadvantages:

Variable Chunk Sizes: Short sentences create tiny chunks, while long sentences may exceed desired size limits.

Dependency on NLP Tools: Accuracy depends on the quality of sentence tokenizers, which may struggle with complex punctuation or non-standard text.
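One common way to soften the variable-size problem is to pack consecutive sentences together up to a word budget. The sketch below assumes the sentences have already been split; `pack_sentences` and `max_words` are hypothetical names used for illustration.

```python
def pack_sentences(sentences, max_words=50):
    """Greedily merge consecutive sentences into chunks of at most max_words words.

    A single sentence longer than max_words is kept whole as its own chunk.
    """
    chunks, current, current_len = [], [], 0
    for sentence in sentences:
        n_words = len(sentence.split())
        # Start a new chunk if adding this sentence would exceed the budget
        if current and current_len + n_words > max_words:
            chunks.append(" ".join(current))
            current, current_len = [], 0
        current.append(sentence)
        current_len += n_words
    if current:
        chunks.append(" ".join(current))
    return chunks

sentences = ["One two three.", "Four five.", "Six seven eight nine.", "Ten."]
print(pack_sentences(sentences, max_words=5))
```

This keeps sentence boundaries intact while avoiding a flood of one-sentence chunks.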

We can use the following Python code to perform the Sentence-Based chunking with the spaCy library.

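import spacy

# Load the small English pipeline (install it first with: python -m spacy download en_core_web_sm)
nlp = spacy.load("en_core_web_sm")

def sentence_chunk(text):
    # spaCy detects the sentence boundaries; each sentence becomes one chunk
    doc = nlp(text)
    return [sent.text.strip() for sent in doc.sents]

chunks = sentence_chunk(pdf_text)

3. Semantic-Based Chunking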

Semantic-based chunking is a strategy for grouping text by meaning. It often uses embeddings to calculate similarity between sentences or paragraphs.

Sentences are grouped into the same chunk when their similarity (e.g., cosine similarity) stays above a threshold, so each chunk remains semantically coherent.

Advantages:

Contextual Relevance: Produces chunks with strong semantic relation, ideal for retrieval-augmented generation (RAG).

Adaptability: Dynamically adjusts to content, avoiding rigid size constraints.

Disadvantages:

Computational Cost: Embedding generation and similarity calculations are resource-intensive.

Threshold Sensitivity: Requires tuning to balance chunk size and relevance, risking over-fragmentation or over-aggregation.
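To see how sensitive the grouping is to the threshold, here is a small sketch with toy 2-D vectors standing in for sentence embeddings. It is a simplified variant that compares each vector only with its previous neighbor; `cosine_similarity` and `group_by_threshold` are hypothetical helper names.

```python
import math

def cosine_similarity(u, v):
    """Cosine similarity between two dense vectors of equal length."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)

def group_by_threshold(vectors, threshold):
    """Group consecutive vectors; open a new group when similarity to the previous vector drops below threshold."""
    if not vectors:
        return []
    groups = [[0]]
    for i in range(1, len(vectors)):
        if cosine_similarity(vectors[i - 1], vectors[i]) >= threshold:
            groups[-1].append(i)
        else:
            groups.append([i])
    return groups

# Toy vectors (assumed values) that drift from one "topic" direction to another
toy_vectors = [(1.0, 0.0), (0.9, 0.1), (0.6, 0.8), (0.0, 1.0)]
for threshold in (0.5, 0.9):
    print(threshold, group_by_threshold(toy_vectors, threshold))
```

With the lower threshold everything lands in one group, while the higher threshold splits the same data into three groups, which is the over-aggregation versus over-fragmentation trade-off in miniature.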

We can use the following code to perform semantic-based chunking.

import spacy
from sentence_transformers import SentenceTransformer, util

nlp = spacy.load("en_core_web_sm")
model = SentenceTransformer("all-MiniLM-L6-v2")

def semantic_embedding_chunk(text, threshold=0.75):
    """
    Splits text into semantic chunks using sentence embeddings.
    Uses spaCy for sentence segmentation and SentenceTransformer for generating embeddings.

    :param text: The full text to chunk.
    :param threshold: Cosine similarity threshold for adding a sentence to the current chunk.
    :return: A list of semantic chunks (each as a string).
    """
    # Sentence segmentation
    doc = nlp(text)
    sentences = [sent.text.strip() for sent in doc.sents if sent.text.strip()]

    chunks = []
    current_chunk_sentences = []
    current_chunk_embedding = None

    for sentence in sentences:
        # Generate embedding for the current sentence
        sentence_embedding = model.encode(sentence, convert_to_tensor=True)

        # If starting a new chunk, initialize it with the current sentence
        if current_chunk_embedding is None:
            current_chunk_sentences = [sentence]
            current_chunk_embedding = sentence_embedding
        else:
            # Compute cosine similarity between current sentence and the chunk embedding
            sim_score = util.cos_sim(sentence_embedding, current_chunk_embedding)
            if sim_score.item() >= threshold:
                # Add sentence to the current chunk and update the chunk's average embedding
                current_chunk_sentences.append(sentence)
                num_sents = len(current_chunk_sentences)
                current_chunk_embedding = ((current_chunk_embedding * (num_sents - 1)) + sentence_embedding) / num_sents
            else:
                # Finalize the current chunk and start a new one
                chunks.append(" ".join(current_chunk_sentences))
                current_chunk_sentences = [sentence]
                current_chunk_embedding = sentence_embedding

    # Append the final chunk if it exists
    if current_chunk_sentences:
        chunks.append(" ".join(current_chunk_sentences))

    return chunks

semantic_chunks = semantic_embedding_chunk(pdf_text, threshold=0.75)

for i, chunk in enumerate(semantic_chunks):
    print(f"Chunk {i+1}:\n{chunk}\n{'-'*60}")

Adjusting the threshold lets us experiment with how aggressively sentences are grouped into chunks.

4. Recursive Chunking

Recursive chunking is a strategy where we repeatedly split text with progressively finer separators (e.g., by paragraph, sentence, word) until the chunks meet the size limit. For example, a long paragraph might be divided into sentences and then, if needed, into smaller token groups.
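To make the coarse-to-fine fallback concrete, here is a minimal recursive sketch. It assumes character length as the size limit and the separator order paragraph, line, word; `recursive_chunk` is a hypothetical helper, and pieces that still fit are merged back together so the text is not shredded into single words.

```python
def recursive_chunk(text, max_length=500, separators=("\n\n", "\n", " ")):
    """Split text so that every chunk has at most max_length characters."""
    if len(text) <= max_length:
        return [text.strip()] if text.strip() else []
    if not separators:
        # No separator left to try: fall back to a hard cut
        return [text[i:i + max_length] for i in range(0, len(text), max_length)]
    sep, finer = separators[0], separators[1:]
    chunks, current = [], ""
    for piece in text.split(sep):
        candidate = piece if not current else current + sep + piece
        if len(candidate) <= max_length:
            current = candidate  # the piece still fits into the running chunk
            continue
        if current:
            chunks.append(current)
            current = ""
        if len(piece) <= max_length:
            current = piece
        else:
            # The piece alone is too long: recurse with the next, finer separator
            chunks.extend(recursive_chunk(piece, max_length, finer))
    if current:
        chunks.append(current)
    return [c.strip() for c in chunks if c.strip()]

print(recursive_chunk("aaaa bbbb\n\ncccc dddd eeee", max_length=10))
```

Here the first paragraph fits as is, while the second one is too long and gets split again at word level.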

Advantages:

Size Compliance: Guarantees chunks adhere to strict size constraints.

Flexibility: Handles variable-length text gracefully.

Disadvantages:

Fragmentation Risk: Over-splitting can disrupt coherence.

Inefficiency: Multiple recursion levels increase processing time.

Code-wise, we can implement recursive chunking with the following code.

def iterative_chunk(text, max_length=500):
    # Separators ordered from coarsest to finest; "" means a hard cut
    separators = ["\n\n", "\n", " ", ""]
    chunks = []
    while len(text) > max_length:
        for sep in separators:
            if sep == "":
                # If no separator was found, just cut the text at max_length
                idx = max_length
                break
            # Last occurrence of the separator within the first max_length characters
            idx = text.rfind(sep, 0, max_length)
            if idx > 0:
                break
        chunk = text[:idx].strip()
        if chunk:
            chunks.append(chunk)
        text = text[idx:]
    if text.strip():
        chunks.append(text.strip())
    return chunks

chunks = iterative_chunk(pdf_text, max_length=500)

You can always tweak the logic to find which recursive pattern suits your work.

5. Sliding-Window Chunking

Sliding-window chunking creates overlapping chunks by moving a fixed-size window across the text (e.g., a 200-token window with a 50-token overlap).

This redundancy ensures context spans adjacent chunks and mitigates edge-related information loss.
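As a quick worked example of the arithmetic, the sketch below computes where each window starts and how much extra text the overlap stores. It assumes a 1,000-token document with the 200-token window and 50-token overlap mentioned above; `window_starts` is a hypothetical helper.

```python
def window_starts(n_tokens, window_size=200, overlap=50):
    """Start offsets of each window, stopping once a window reaches the end of the text."""
    if not 0 <= overlap < window_size:
        raise ValueError("overlap must be non-negative and smaller than window_size")
    step = window_size - overlap  # how far the window moves each time
    starts = []
    start = 0
    while start < n_tokens:
        starts.append(start)
        if start + window_size >= n_tokens:
            break
        start += step
    return starts

starts = window_starts(1000, window_size=200, overlap=50)
print(starts)        # [0, 150, 300, 450, 600, 750, 900]
print(len(starts))   # 7 windows

# Total tokens stored across all windows, compared with the original 1000
stored = sum(min(s + 200, 1000) - s for s in starts)
print(stored / 1000)  # 1.3
```

So in this setup the index holds about 30% more tokens than the source text, which is the storage cost paid for context continuity.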

Advantages:

Context Continuity: Overlaps preserve relationships between ideas split across chunks.

Flexibility: Adjustable window and overlap sizes suit different use cases.

Disadvantages:

Redundancy: Duplicate content increases storage costs and complicates deduplication.

Computational Load: Overlapping regions multiply processing steps for downstream tasks.

The Python code implementation looks like this.

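def sliding_window_chunk(text, window_size=100, overlap=20):
    if not 0 <= overlap < window_size:
        raise ValueError("overlap must be non-negative and smaller than window_size")
    words = text.split()
    chunks = []
    step = window_size - overlap
    for i in range(0, len(words), step):
        chunks.append(" ".join(words[i:i+window_size]))
        # Stop once the window reaches the end, so the tail is not repeated as extra chunks
        if i + window_size >= len(words):
            break
    return chunks

chunks = sliding_window_chunk(pdf_text, window_size=100, overlap=20)

6. Hierarchical Chunking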

Hierarchical chunking uses a document’s structure (headings, sections) to create nested chunks.

For example, a chapter (parent chunk) contains subsections (child chunks) containing paragraphs. This structure mirrors organizational frameworks like XML/HTML trees.
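The parent and child relationship can be sketched as a small tree. This is only an illustrative data structure, assuming each node has a title, optional text, and children; `ChunkNode` and `flatten` are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import List

@dataclass
class ChunkNode:
    """One node in the hierarchy: a title, its own text, and nested child chunks."""
    title: str
    text: str = ""
    children: List["ChunkNode"] = field(default_factory=list)

def flatten(node, path=()):
    """Yield (heading path, text) pairs so each text keeps its parent context."""
    current_path = path + (node.title,)
    if node.text:
        yield " > ".join(current_path), node.text
    for child in node.children:
        yield from flatten(child, current_path)

chapter = ChunkNode(
    title="Chapter 1",
    children=[
        ChunkNode(title="Section 1.1", text="First paragraph."),
        ChunkNode(title="Section 1.2", text="Second paragraph."),
    ],
)
for heading_path, text in flatten(chapter):
    print(f"{heading_path}: {text}")
```

Storing the heading path next to each child chunk is one way to let retrieval return a specific paragraph while still knowing which chapter and section it came from.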

Advantages:

Structural Faithfulness: Preserves logical flow and multi-level context.

Multi-Granularity Access: Supports drilling down from broad themes to specifics.

Disadvantages:

Structure Dependency: Requires well-formatted documents; struggles with unstructured text.

Implementation Complexity: Needs robust detection of headings, lists, or markup tags.

We can implement the hierarchical chunking strategy in two ways: manually or automatically.

Manual hierarchical chunking splits the document based on certain markers, such as the introduction or conclusion.

# Manual Hierarchical Chunking

def hierarchical_chunk_manual(text, markers=("INTRODUCTION", "CONCLUSION")):
    """
    Splits text into chunks based on manually provided markers.
    """
    lines = text.splitlines()
    chunks = []
    current_chunk = []
    for line in lines:
        # If any manual marker is found in the line and there is already accumulated content
        if any(marker in line for marker in markers) and current_chunk:
            chunks.append("\n".join(current_chunk).strip())
            current_chunk = [line]
        else:
            current_chunk.append(line)
    if current_chunk:
        chunks.append("\n".join(current_chunk).strip())
    return chunks

manual_chunks = hierarchical_chunk_manual(pdf_text, markers=["INTRODUCTION", "CONCLUSION"])

In contrast, automatic hierarchical chunking detects the structure based on the logic we create. You can even use an LLM to do that.

# Automatic Hierarchical Chunking

def detect_markers(text):
    """
    Automatically detects potential header markers.
    Heuristic: any short line (<= 10 words) that is either all uppercase or ends with a colon is treated as a marker.
    """
    lines = text.splitlines()
    markers = []
    for line in lines:
        stripped = line.strip()
        words = stripped.split()
        if words and len(words) <= 10:
            if stripped.isupper() or stripped.endswith(":"):
                markers.append(stripped)
    return list(set(markers))

def hierarchical_chunk_auto(text):
    """
    Splits text into chunks using automatically detected markers.
    Returns both the chunks and the detected markers.
    """
    auto_markers = detect_markers(text)

    # Sort markers in order of appearance
    lines = text.splitlines()
    detected = []
    for line in lines:
        for marker in auto_markers:
            if marker in line and marker not in detected:
                detected.append(marker)

    chunks = []
    current_chunk = []
    for line in lines:
        # If any detected marker is found and there is accumulated text, start a new chunk
        if any(marker in line for marker in detected) and current_chunk:
            chunks.append("\n".join(current_chunk).strip())
            current_chunk = [line]
        else:
            current_chunk.append(line)
    if current_chunk:
        chunks.append("\n".join(current_chunk).strip())
    return chunks, detected

auto_chunks, auto_markers = hierarchical_chunk_auto(pdf_text)

7. Topic-Based Chunking

As its name suggests, topic-based chunking applies topic-modeling or clustering algorithms (e.g., LDA, k-means) to group text by themes.

Chunks represent coherent topics, such as "climate change" or "market trends," identified via word frequency or embedding patterns.

Advantages:

Thematic Consistency: Enhances retrieval accuracy in topic-driven applications.

Dynamic Grouping: Adapts to document content without predefined rules.

Disadvantages:

Topic Overlap: Ambiguous text may belong to multiple clusters, requiring disambiguation.

Computational Overhead: Topic modeling scales poorly with large datasets.
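One simple way to handle topic overlap is to let an ambiguous sentence belong to every topic whose probability is close to the best one. The sketch below assumes we already have per-sentence topic probabilities, such as one row of a topic model's output; `assign_topics` and `margin` are hypothetical names.

```python
def assign_topics(topic_probs, margin=0.1):
    """Return every topic index whose probability is within `margin` of the best one."""
    best = max(topic_probs)
    return [i for i, p in enumerate(topic_probs) if best - p <= margin]

print(assign_topics([0.85, 0.15]))  # clear case: only topic 0
print(assign_topics([0.52, 0.48]))  # ambiguous case: topics 0 and 1
```

Duplicating a sentence across topics costs some storage, so the margin is a trade-off worth tuning on your own data.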

You can use the following code to perform the topic-based chunking.

from sklearn.feature_extraction.text import CountVectorizer
from sklearn.decomposition import LatentDirichletAllocation

def topic_based_chunk(text, n_topics=2):
    # Split text into sentences (using period followed by space as a simple splitter)
    sentences = text.split('. ')

    # Build a bag-of-words matrix and fit an LDA topic model on it
    vectorizer = CountVectorizer(stop_words='english')
    X = vectorizer.fit_transform(sentences)
    lda = LatentDirichletAllocation(n_components=n_topics, random_state=42)
    lda.fit(X)

    # Assign each sentence to its most probable topic
    topic_distribution = lda.transform(X)
    topics = {}
    for i, sent in enumerate(sentences):
        topic = topic_distribution[i].argmax()
        topics.setdefault(topic, []).append(sent)
    return topics

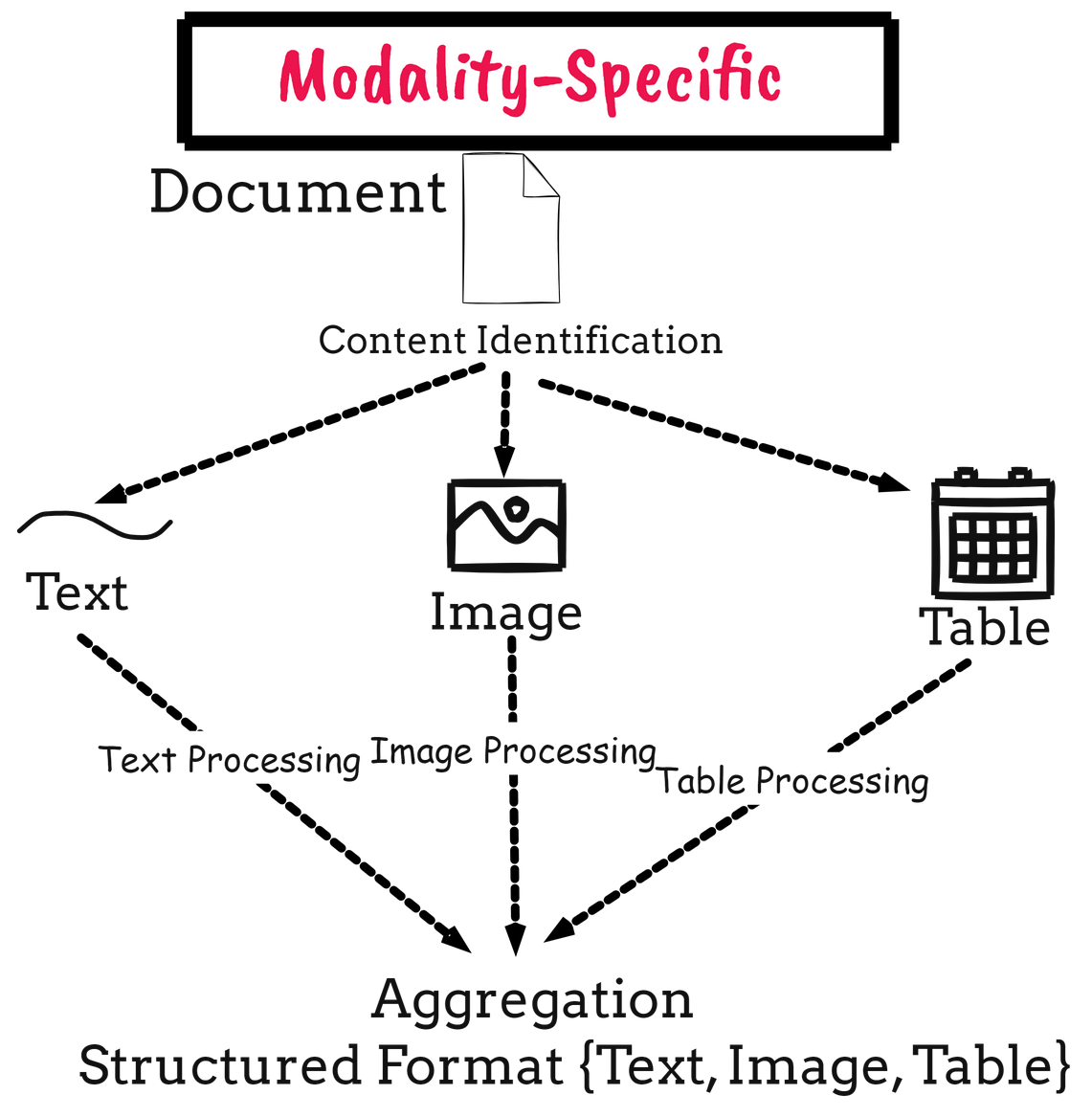

topics = topic_based_chunk(pdf_text, n_topics=2)

8. Modality-Specific Chunking

This strategy separates mixed-content documents (text, images, tables) into modality-specific segments.

For example, tables are extracted as CSV files, images are PNGs, and text is paragraphs. Each modality is processed independently using specialized tools (e.g., OCR for images).
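As a small illustration of the table-to-CSV step, the sketch below serializes a table given as a list of rows using only the standard library. It assumes the table arrives as a list of lists in which empty cells may be `None`; `table_to_csv` is a hypothetical helper.

```python
import csv
import io

def table_to_csv(table):
    """Serialize a table given as a list of rows (lists of cell values) to CSV text."""
    buffer = io.StringIO()
    writer = csv.writer(buffer, lineterminator="\n")
    for row in table:
        # Extracted tables may contain None for empty cells; write those as ""
        writer.writerow(["" if cell is None else cell for cell in row])
    return buffer.getvalue()

toy_table = [["Strategy", "Unit"], ["Fixed-size", "words"], ["Sentence", None]]
print(table_to_csv(toy_table))
```

Keeping each table as its own CSV chunk preserves the row and column structure that would be lost if the table were flattened into running text.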

Advantages:

Preserved Integrity: Maintains the structure and meaning of non-text elements.

Tailored Processing: Enables optimized handling for each content type.

Disadvantages:

Increased Complexity: Requires pipelines for each modality and logic to reintegrate outputs.

Resource Overhead: Demands diverse tools (e.g., table extractors, image classifiers).

The following code can be used for the Modality-Specific chunking strategy. I am using the simplest approach here, so feel free to tweak the pipeline.

import PyPDF2
import pdfplumber

def extract_pdf_text(pdf_path):
    """
    Extracts text from each page of the PDF using PyPDF2 and returns it as one string.
    """
    reader = PyPDF2.PdfReader(pdf_path)
    full_text = ""
    for page in reader.pages:
        page_text = page.extract_text()
        if page_text:
            full_text += page_text + "\n\n"  # Separate pages with double newlines
    return full_text

def extract_tables(pdf_path):
    """
    Extracts tables from the PDF using pdfplumber.
    Returns a list of tables (each table is a list of lists).
    """
    tables = []
    with pdfplumber.open(pdf_path) as pdf:
        for page in pdf.pages:
            page_tables = page.extract_tables()
            for table in page_tables:
                tables.append(table)
    return tables

def extract_images(pdf_path):
    """
    Extracts images from the PDF using PyPDF2.
    Note: PyPDF2 has limited image extraction capabilities.
    Returns a list of dictionaries with image data and metadata.
    """
    reader = PyPDF2.PdfReader(pdf_path)
    images = []
    for page_num, page in enumerate(reader.pages):
        resources = page.get("/Resources")
        if resources and "/XObject" in resources:
            xObject = resources["/XObject"].get_object()
            for obj in xObject:
                if xObject[obj].get("/Subtype") == "/Image":
                    data = xObject[obj].get_data()
                    # Determine image type from the filter
                    if "/Filter" in xObject[obj]:
                        if xObject[obj]["/Filter"] == "/DCTDecode":
                            ext = "jpg"
                        elif xObject[obj]["/Filter"] == "/FlateDecode":
                            # FlateDecode streams hold compressed pixel data; treat "png"
                            # as a label only, since the bytes may not form a valid PNG file
                            ext = "png"
                        else:
                            ext = "bin"
                    else:
                        ext = "bin"
                    images.append({"page": page_num, "data": data, "ext": ext})
    return images

def modality_chunk(pdf_path):
    """
    Extracts text, tables, and images from a PDF file.
    - Text is split into paragraphs (using double newlines).
    - Tables are extracted using pdfplumber.
    - Images are extracted using PyPDF2.
    Returns a dictionary with keys: "text_chunks", "tables", and "images".
    """
    text = extract_pdf_text(pdf_path)
    text_chunks = [p.strip() for p in text.split("\n\n") if p.strip()]
    tables = extract_tables(pdf_path)
    images = extract_images(pdf_path)
    return {"text_chunks": text_chunks, "tables": tables, "images": images}

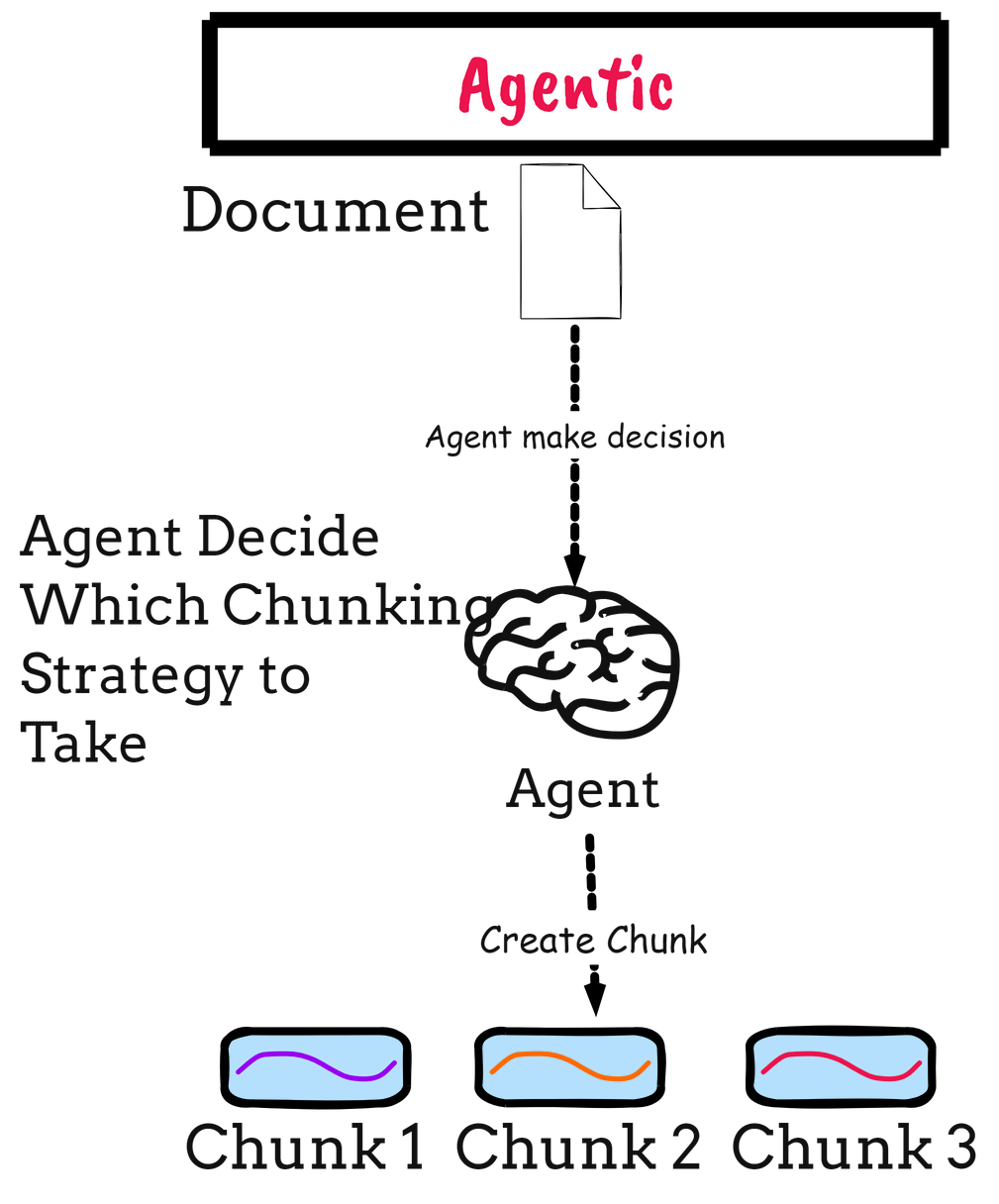

result = modality_chunk(pdf_file)

9. Agentic Chunking

Agentic chunking employs LLMs and agents to dynamically identify breakpoints or decide which chunking technique to use. The method relies on agents to do what a human would do when splitting a document.

Advantages:

Context-Awareness: Yields human-like, nuanced chunks.

Adaptive: Adjusts to document genre, style, and complexity.

Disadvantages:

Cost and Latency: Multiple LLM calls increase expenses and processing time.

Prompt Sensitivity: Output quality depends on carefully crafted prompts.

In the code below, I base the agentic chunking on the Ranjith method, which performs chunking on propositions: the LLM develops the propositions, and the agent then creates the chunk groups from them.

import uuid
import time
from typing import List, Dict, Optional

import google.generativeai as genAI

class AgenticChunker:
    def __init__(self):
        # Configure the API key before creating the model
        genAI.configure(api_key="YOUR-API-KEY")
        self.chunks = {}  # chunk information
        self.agent = genAI.GenerativeModel("gemini-2.0-flash")  # Updated model name
        self.chunk_id_length = 5  # For truncating the Chunk ID

    def add_propositions(self, propositions: List[str]):
        """Add multiple propositions with rate limiting"""
        for idx, proposition in enumerate(propositions):
            print(f"Processing proposition {idx+1}/{len(propositions)}")
            self.add_proposition(proposition)
            time.sleep(4)  # Wait 4 seconds between propositions to respect 15 rpm limit

    def add_proposition(self, proposition: str):
        """Add a single proposition to the appropriate chunk"""
        print(f"Evaluating: {proposition[:50]}...")  # Show truncated proposition
        if not self.chunks:
            print("No existing chunks - creating first chunk")
            self.create_new_chunk(proposition)
            return

        relevant_chunk_id = self.find_relevant_chunk(proposition)
        if relevant_chunk_id:
            print(f"Adding to existing chunk: {self.chunks[relevant_chunk_id]['title']}")
            self.add_proposition_to_chunk(relevant_chunk_id, proposition)
        else:
            print("Creating new chunk for proposition")
            self.create_new_chunk(proposition)

    def add_proposition_to_chunk(self, chunk_id: str, proposition: str):
        """Add proposition to existing chunk and update metadata"""
        self.chunks[chunk_id]["propositions"].append(proposition)
        # Batch updates to reduce API calls: update when a multiple of 3 propositions are reached
        if len(self.chunks[chunk_id]["propositions"]) % 3 == 0:
            self.chunks[chunk_id]["summary"] = self.update_chunk_summary(self.chunks[chunk_id])
            self.chunks[chunk_id]["title"] = self.update_chunk_title(self.chunks[chunk_id])

    def _generate_content(self, prompt: str) -> str:
        """Wrapper for Gemini API calls with error handling and rate limiting"""
        try:
            response = self.agent.generate_content(prompt)
            # Wait 5 seconds after each API call to avoid exceeding rate limits
            time.sleep(5)
            return response.text.strip()
        except Exception as e:
            print(f"API Error: {str(e)}")
            return ""  # Return empty string to prevent pipeline failure

    def update_chunk_summary(self, chunk: Dict) -> str:
        """Generate updated chunk summary"""
        prompt = f"""
        You are the steward of a group of chunks representing sentences about a similar topic.
        A new proposition was just added. Generate a very brief 1-sentence summary that informs viewers what the chunk is about.
        Only respond with the new summary, nothing else.
        Chunk's propositions:
        {chr(10).join(chunk['propositions'][-3:])}
        Current summary: {chunk['summary'] if 'summary' in chunk else ''}
        """
        return self._generate_content(prompt)

    def update_chunk_title(self, chunk: Dict) -> str:
        """Generate updated chunk title"""
        prompt = f"""
        You are the steward of a group of chunks representing related propositions.
        A new proposition was just added. Generate a very brief updated chunk title (2-4 words) that summarizes the chunk's theme.
        Only respond with the new title, nothing else.
        Chunk's propositions:
        {chr(10).join(chunk['propositions'][-3:])}
        Chunk summary: {chunk['summary'] if 'summary' in chunk else ''}
        Current chunk title: {chunk['title'] if 'title' in chunk else ''}
        """
        return self._generate_content(prompt)

    def get_new_chunk_summary(self, proposition: str) -> str:
        """Generate initial summary for a new chunk"""
        prompt = f"""
        You are the steward of a group of chunks representing groups of sentences that talk about a similar topic.
        Generate a very brief 1 to 2-sentence summary that describes the topic of the new chunk based on the following proposition.
        Only respond with the new chunk summary, nothing else.
        Proposition:
        {proposition}
        """
        return self._generate_content(prompt)

    def get_new_chunk_title(self, summary: str) -> str:
        """Generate initial title for a new chunk based on its summary"""
        prompt = f"""
        You are the steward of a group of chunks. Based on the following summary, generate a concise title (2-4 words) that captures the essence of the chunk.
        Only respond with the new chunk title, nothing else.
        Chunk summary:
        {summary}
        """
        return self._generate_content(prompt)

    def create_new_chunk(self, proposition: str):
        """Create new chunk with initial proposition"""
        new_chunk_id = str(uuid.uuid4())[:self.chunk_id_length]  # Unique chunk id
        new_chunk_summary = self.get_new_chunk_summary(proposition)
        new_chunk_title = self.get_new_chunk_title(new_chunk_summary)
        self.chunks[new_chunk_id] = {
            'chunk_id': new_chunk_id,
            'propositions': [proposition],
            'title': new_chunk_title,
            'summary': new_chunk_summary,
            'chunk_index': len(self.chunks)
        }
        print(f"Created new chunk {new_chunk_id}: {new_chunk_title}")

    def find_relevant_chunk(self, proposition: str) -> Optional[str]:
        """Find a matching chunk for the proposition (returns None when nothing matches)"""
        prompt = f"""
        Determine whether the following proposition should belong to one of the existing chunks.
        If it should, return the chunk ID. If not, return 'NO_MATCH'.
        Existing chunks (ID: Title - Summary):
        {self._format_chunk_outline()}
        Proposition:
        {proposition}
        Respond ONLY with the matching chunk ID or 'NO_MATCH'.
        """
        response = self._generate_content(prompt)
        resp = response.strip()
        if resp == "NO_MATCH" or resp not in self.chunks:
            return None
        return resp

    def _format_chunk_outline(self) -> str:
        """Format chunk information for LLM input"""
        return "\n".join(
            f"{c['chunk_id']}: {c['title']} - {c['summary']}"
            for c in self.chunks.values()
        )

    def pretty_print_chunks(self):
        """Display all chunks with their metadata"""
        print("\n----- Chunks Created -----\n")
        for _, chunk in self.chunks.items():
            print(f"Chunk ID : {chunk['chunk_id']}")
            print(f"Title : {chunk['title'].strip()}")
            print(f"Summary : {chunk['summary'].strip()}")
            print("Propositions:")
            for prop in chunk['propositions']:
                print(f" - {prop}")
            print("\n")

    def print_chunks(self):
        self.pretty_print_chunks()

chunker = AgenticChunker()

# add_propositions expects a list of strings; passing the raw string would iterate over characters.
# A naive sentence split stands in here for the LLM-generated propositions.
propositions = [s.strip() for s in pdf_text.split(". ") if s.strip()]
chunker.add_propositions(propositions)
chunker.print_chunks()

There are so many things you can do with Agentic Chunking. You only need to use the logic you are comfortable with.
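For example, once the propositions are grouped, each group can be flattened into a single text chunk that is ready for embedding and indexing. This is a minimal sketch assuming the same dictionary layout as the `chunks` attribute of `AgenticChunker`; `chunks_to_documents` is a hypothetical helper, and the toy data below is made up for illustration.

```python
def chunks_to_documents(chunks):
    """Turn {chunk_id: {...}} into a list of dicts ready for embedding and indexing."""
    documents = []
    # Keep the chunks in the order in which they were created
    for chunk in sorted(chunks.values(), key=lambda c: c["chunk_index"]):
        documents.append({
            "id": chunk["chunk_id"],
            "title": chunk["title"],
            "text": " ".join(chunk["propositions"]),
        })
    return documents

# Toy data (assumed values) mirroring the structure built by create_new_chunk
toy_chunks = {
    "a1b2c": {
        "chunk_id": "a1b2c",
        "title": "Chunking Basics",
        "summary": "About chunking.",
        "propositions": ["Chunking splits documents.", "Chunks are indexed."],
        "chunk_index": 0,
    },
}
print(chunks_to_documents(toy_chunks))
```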

That’s all for now. You can check out the RAG-To-Know repository for the whole code of Chunking Strategies for RAG.

Is there anything else you’d like to discuss? Let’s dive into it together!
