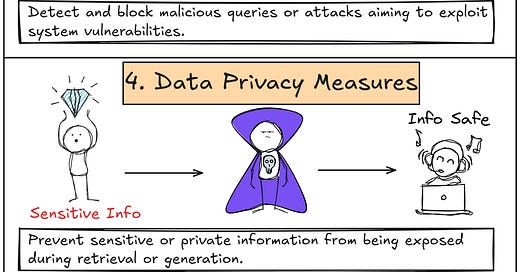

Building Guardrails Around Your RAG Pipeline

NBD Lite #48 - Why RAG Systems Fail—And How to Fortify Them

All the code used here is present in the RAG-To-Know repository.

Retrieval-augmented generation (RAG) systems have transformed how AI interacts with information. They combine the power of large language models (LLMs) with dynamic data retrieval to deliver responses grounded in relevant information.

While we previously explored evaluating our LLM using the LLM-as-a-Judge approach and improving our RAG’s technical performance, there are still instances where the RAG pipeline may behave unpredictably or deviate from our expectations.

As RAG systems grow more complex, so do their risks. Without proper safeguards, RAG pipelines can accidentally expose sensitive data, generate biased or toxic outputs, or fall victim to adversarial attacks.

Enter RAG Guardrails, a set of critical measures designed to secure every stage of the RAG pipeline and ensure that it operates according to our standards.

This article will explore the essential guardrails every RAG system needs, from input and output validation to adversarial protection and data privacy measures.

Let’s explore it together! Here is a diagram of what we will discuss and build today. Don’t forget all the code is stored in the RAG-To-Know repository.


Introduction to Guardrails in RAG

As we learned previously, RAG systems combine two critical components, retrieval and generation, to bring contextual accuracy to their outputs. However, this added sophistication brings its own challenges.

Some challenges that can arise in our RAG system include:

Hallucinations in the output

Data privacy and leakage

Vulnerabilities in the data source

Bias and toxicity

Inconsistency

Other challenges may be specific to your domain and business use case. So, how can we manage all these challenges?

Guardrails help address the problems above. We can define Guardrails as safety mechanisms or control systems implemented in RAG pipelines to ensure the system operates as the user expects.

At various pipeline stages, they act as filters, validators, and monitors to prevent problems we want to mitigate and avoid.
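The three roles named above can be illustrated with a small, library-free sketch. It assumes each guardrail is a plain Python function that either returns the (possibly cleaned) text or raises an exception; the names `GuardrailViolation`, `run_guardrails`, `reject_empty`, and `collapse_whitespace` are hypothetical and not part of any library. `reject_empty` acts as a validator, `collapse_whitespace` as a filter, and the `log` list stands in for a monitor.

```python
from typing import Callable, List


class GuardrailViolation(ValueError):
    """Raised when a guardrail rejects the text it is checking."""


# A guardrail takes text and returns (possibly modified) text,
# or raises GuardrailViolation to stop the pipeline.
Guardrail = Callable[[str], str]


def reject_empty(text: str) -> str:
    """Validator: block blank input."""
    if not text.strip():
        raise GuardrailViolation("Text is empty.")
    return text


def collapse_whitespace(text: str) -> str:
    """Filter: normalize runs of whitespace without rejecting anything."""
    return " ".join(text.split())


def run_guardrails(text: str, guardrails: List[Guardrail], log: List[str]) -> str:
    """Apply guardrails in order, recording each outcome in `log` (a simple monitor)."""
    for guardrail in guardrails:
        try:
            text = guardrail(text)
        except GuardrailViolation as e:
            log.append(f"{guardrail.__name__}: blocked ({e})")
            raise
        log.append(f"{guardrail.__name__}: passed")
    return text


log: List[str] = []
print(run_guardrails("  What   does my policy cover? ", [reject_empty, collapse_whitespace], log))
# prints: What does my policy cover?
```

Raising an exception instead of returning an error string means a failed check cannot be silently ignored by the next stage of the pipeline.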

Why Guardrails Are Non-Negotiable

Guardrails are non-negotiable in production systems. We need safety measures that will help our systems work correctly.

Here are a few reasons we should put Guardrails in our RAG pipeline.

Safety: Guardrails protect users from harmful or inappropriate content.

Trust: They ensure the system produces reliable and ethical outputs, building user confidence.

Compliance: They help meet regulatory requirements for data privacy, fairness, and transparency.

Reputation: They prevent incidents that could damage the reputation of the organization deploying the RAG system.

Robustness: They make the system resilient to adversarial attacks and misuse.

Given how vital Guardrails are to a RAG system, let’s build them with code.

Building Guardrails in the RAG System

In a RAG system, Guardrails are generally applied to the following areas:

Inputs: Validate and sanitize user queries and retrieved data.

Outputs: Verify and moderate the generated responses.

Data Privacy: Prevent sensitive or private information from being exposed.

Adversarial Protection: Detect and block malicious attempts to exploit the system.

There could be more depending on the situation and business case, but we will focus on building Guardrails for the four areas above.

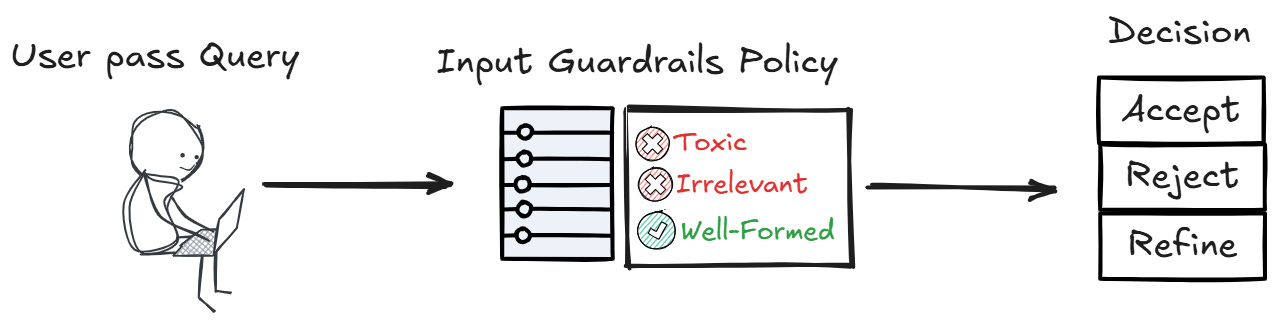

1. Input Guardrails

We will first discuss Input Guardrails, as user inputs are the main entry point from the outside world into the system.

Input Guardrails in a RAG system ensure that user queries are safe, valid, and appropriate before the system processes them. This includes:

Detecting and blocking toxic or inappropriate content.

Validating the query for adversarial patterns or malicious intent.

Ensuring the query is well-formed and relevant to the system’s purpose.

Input Guardrails are essential for guarding the entry point of a clean, stable, and efficient RAG system.
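The last check in the list above (well-formed and relevant queries) can be sketched without any external library. This is a minimal example under stated assumptions: `MAX_QUERY_CHARS`, the `DOMAIN_KEYWORDS` vocabulary (an insurance assistant, matching the example queries in this article), and `check_query_shape` are all hypothetical. Keyword overlap is only a crude relevance signal; embedding similarity against your document store would likely be more robust.

```python
import re

MAX_QUERY_CHARS = 500  # assumed limit; tune for your system

# Hypothetical vocabulary for an insurance assistant (illustrative only).
DOMAIN_KEYWORDS = {"insurance", "policy", "claim", "premium", "coverage", "deductible", "car"}


def check_query_shape(query: str) -> str:
    """Reject empty, overly long, or off-topic queries; return the stripped query."""
    cleaned = query.strip()
    if not cleaned:
        raise ValueError("Query is empty.")
    if len(cleaned) > MAX_QUERY_CHARS:
        raise ValueError(f"Query exceeds {MAX_QUERY_CHARS} characters.")
    # Lowercase alphabetic tokens; "relevant" means at least one domain keyword appears.
    words = set(re.findall(r"[a-z]+", cleaned.lower()))
    if not words & DOMAIN_KEYWORDS:
        raise ValueError("Query does not appear related to this assistant's domain.")
    return cleaned


print(check_query_shape("  What does my car insurance cover? "))
# prints: What does my car insurance cover?
```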

You decide what is important and how to act on that policy. If you find toxic input, you may want to refine or reject it outright; it depends on your system.
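As a rule-based illustration of that choice, the sketch below either rejects a query or masks the offending words, depending on a `policy` argument. The `BLOCKED_TERMS` list and the `apply_toxicity_policy` function are hypothetical; a keyword list is far less nuanced than a trained classifier such as the Detoxify model used next.

```python
import re

# Hypothetical blocklist; a real deployment would curate this or use a classifier.
BLOCKED_TERMS = ["scam", "liar"]
BLOCKED_RE = re.compile(r"\b(?:" + "|".join(map(re.escape, BLOCKED_TERMS)) + r")\b", re.IGNORECASE)


def apply_toxicity_policy(query: str, policy: str = "reject") -> str:
    """Rule-based moderation: 'reject' raises on a blocked term, 'mask' replaces it with ***."""
    if policy not in ("reject", "mask"):
        raise ValueError(f"Unknown policy: {policy}")
    if not BLOCKED_RE.search(query):
        return query  # nothing to act on
    if policy == "reject":
        raise ValueError("Query contains blocked terms.")
    return BLOCKED_RE.sub("***", query)


print(apply_toxicity_policy("It's a SCAM and you are a liar.", policy="mask"))
# prints: It's a *** and you are a ***.
```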

For example, we will moderate the input query to our RAG system and reject toxic content. We can use a rule-based implementation or rely on an external library or LLM to detect it. Here, I will use the toxicity classifier from the Detoxify library.

```python
from detoxify import Detoxify

# Load the model once; reloading it on every call is slow.
toxicity_model = Detoxify('original')

# Input Guardrail: ensure the query is free from toxic or inappropriate content.
def validate_input(query):
    """
    Use Detoxify to detect toxic content in the query.
    Raises ValueError if the toxicity score exceeds the threshold.
    """
    results = toxicity_model.predict(query)
    if results['toxicity'] > 0.5:  # Threshold for toxicity
        raise ValueError("Query contains toxic or inappropriate content.")
    return True
```

We can pass our example “toxic” input to the system with the function above.

```python
# Example query
query = "I don't like the insurance because it's a scam and you are f*****g liar."

try:
    validate_input(query)
    print("Query passed the input guardrail.")
except ValueError as e:
    print(f"Input guardrail triggered: {e}")
```

Output>>

```
Input guardrail triggered: Query contains toxic or inappropriate content.
```

Our Input Guardrail is triggered because the query contains toxic content, so the function raises a ValueError.

We can always tweak the function and the system to fit our needs. What matters is that the input is controlled.
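One such tweak is to check several toxicity categories, each with its own threshold, rather than the single `toxicity` score. According to the Detoxify README, the `original` model returns scores for `toxicity`, `severe_toxicity`, `obscene`, `threat`, `insult`, and `identity_attack`; the threshold values and the `flagged_labels` helper below are illustrative assumptions. The helper works on any score dictionary, so the sketch runs without the model installed.

```python
from typing import Dict, List

# Illustrative per-label thresholds (assumptions, not tuned values). Labels
# without a threshold, such as "obscene" here, are ignored.
THRESHOLDS: Dict[str, float] = {
    "toxicity": 0.5,
    "severe_toxicity": 0.2,
    "threat": 0.3,
    "insult": 0.5,
}


def flagged_labels(scores: Dict[str, float], thresholds: Dict[str, float] = THRESHOLDS) -> List[str]:
    """Return, sorted, the labels whose score is above that label's threshold."""
    return sorted(label for label, limit in thresholds.items() if scores.get(label, 0.0) > limit)


# Example with made-up scores in the shape of a Detoxify prediction for one string.
example_scores = {"toxicity": 0.42, "severe_toxicity": 0.01, "threat": 0.35, "insult": 0.1, "obscene": 0.9}
print(flagged_labels(example_scores))
# prints: ['threat']
```

Inside `validate_input`, you could pass it the dictionary returned by `Detoxify('original').predict(query)` and raise a `ValueError` whenever the returned list is non-empty.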

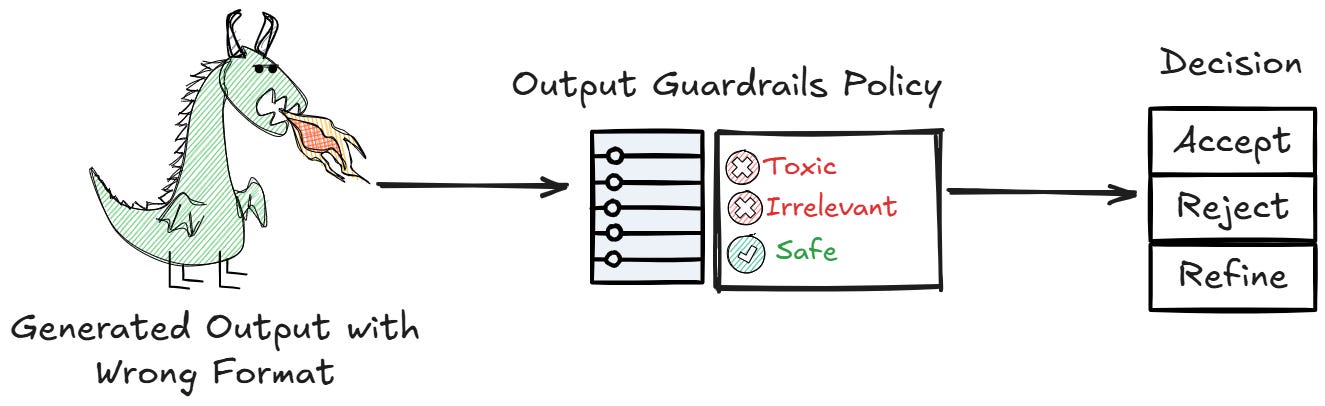

2. Output Guardrails

As with the Input Guardrails, we want to ensure the generated response meets our standards, which usually means it is safe, accurate, and ethical. This is where Output Guardrails check the response before it reaches the end user.

Output Guardrails typically address the following, among others:

Detecting and filtering toxic or harmful content in the generated response.

Verifying the factual accuracy of the response against trusted sources.

Ensuring the response is ethically aligned and does not promote harmful behavior.

Output Guardrails are the last line of defense before the generated text is presented to the user, so we need to build them carefully.
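For the second check in the list above, full fact verification is beyond this article, but a rough grounding signal is easy to sketch: flag any sentence in the response whose content words mostly do not appear in the retrieved context. This is a hypothetical heuristic (`unsupported_sentences`, the stopword list, and the 0.5 overlap threshold are all assumptions); it catches obviously unsupported claims but will miss paraphrased or subtly wrong ones.

```python
import re
from typing import List, Set

# Small assumed stopword list; a real system would use a fuller one.
STOPWORDS = {"the", "a", "an", "is", "are", "of", "to", "and", "in", "for", "it", "your", "you", "on", "by"}


def _content_words(text: str) -> Set[str]:
    """Lowercase alphanumeric tokens with stopwords removed."""
    return {w for w in re.findall(r"[a-z0-9]+", text.lower()) if w not in STOPWORDS}


def unsupported_sentences(response: str, context: str, min_overlap: float = 0.5) -> List[str]:
    """Return response sentences whose content words are mostly absent from the context.

    A crude lexical proxy for grounding, not a real fact checker.
    """
    context_words = _content_words(context)
    flagged = []
    for sentence in re.split(r"(?<=[.!?])\s+", response.strip()):
        words = _content_words(sentence)
        if not words:
            continue
        overlap = len(words & context_words) / len(words)
        if overlap < min_overlap:
            flagged.append(sentence)
    return flagged


context = "The policy covers collision damage up to 10000 dollars. Claims must be filed within 30 days."
response = "The policy covers collision damage. It also includes free flights to Paris."
print(unsupported_sentences(response, context))
# prints: ['It also includes free flights to Paris.']
```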

For example, we can have an Output Guardrail that ensures the generated text always stays within an allowed length.

```python
# Output Guardrail: ensure the generated response stays within a length limit.
def ensure_length_limit(response, max_length=200):
    """
    Ensure the generated response does not exceed the specified length limit.
    Raises ValueError if it does; otherwise returns the response unchanged.
    """
    if len(response) > max_length:
        raise ValueError(f"Generated response exceeds the length limit of {max_length} characters.")
    return response
```

We pass the generated response through the function above to raise an alert if it is longer than allowed.

```python
# semantic_search and generate_response are the retrieval and generation
# functions of our RAG pipeline (see the RAG-To-Know repository).
search_results = semantic_search(query)
context = "\n".join(search_results['documents'][0])
response = generate_response(query, context)

try:
    result = ensure_length_limit(response, max_length=100)
except ValueError as e:
    result = f"Length limit guardrail error: {e}"

print(result)
```

Output>>

```
Length limit guardrail error: Generated response exceeds the length limit of 100 characters.
```

The result above shows that the Guardrail is working as intended. Just like with the Input Guardrail, you can decide how the generated output should be controlled.
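For example, instead of rejecting an overlong answer, you might prefer to trim it. A minimal sketch, assuming the same character-based limit that `ensure_length_limit` uses: the hypothetical `truncate_to_limit` helper cuts at the last sentence boundary that fits and otherwise falls back to a word boundary plus an ellipsis, so the result never exceeds `max_length`.

```python
import re


def truncate_to_limit(response: str, max_length: int = 200) -> str:
    """Trim response to at most max_length characters, preferring a sentence boundary."""
    if len(response) <= max_length:
        return response
    if max_length <= 3:
        return response[:max_length]  # too short to fit an ellipsis
    # End positions of sentence-ending punctuation followed by whitespace or end of text.
    ends = [m.end() for m in re.finditer(r"[.!?](?=\s|$)", response) if m.end() <= max_length]
    if ends:
        return response[: ends[-1]]
    # No sentence fits: leave room for "...", then cut at the last space if there is one.
    room = response[: max_length - 3]
    cut = room.rfind(" ")
    return (room[:cut] if cut > 0 else room) + "..."


print(truncate_to_limit("First sentence here. Second sentence is longer and goes on.", max_length=30))
# prints: First sentence here.
```

Whether to truncate or reject is a policy decision: truncation keeps the user experience smooth, while rejection makes overlong generations easier to spot during monitoring.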

3. Adversarial Protection

The world is not ideal, and malicious users exist. If we open our RAG system to the public, some of them may try to exploit it.

Adversarial protection detects and blocks malicious inputs or attacks that attempt to exploit vulnerabilities in the system. This includes:

Detecting unusual patterns or special characters in queries.

Blocking attempts to manipulate the system’s behavior or extract sensitive information.

With adversarial protection, we can detect malicious attempts against our RAG system and stop them before they cause harm.

For example, we can use a regular expression (RegEx) to detect whether the input contains special characters or scripts that should not be there.

```python
import re

# Adversarial protection: flag queries containing characters outside a small whitelist.
def detect_adversarial_query(query):
    """
    Detect adversarial queries with unusual patterns or special characters.
    Raises ValueError if any character other than letters, digits, underscores,
    whitespace, '.', ',' or '?' is present.
    """
    if re.search(r"[^\w\s.,?]", query):  # Check for special characters
        raise ValueError("Query contains potentially adversarial patterns.")
    return True
```

With the function above, we pass a query with a special character that might have malicious intent.

```python
# Example query
query = "What is the insurance for car? <script>alert('XSS')</script>"

try:
    detect_adversarial_query(query)
    print("Query passed the adversarial check.")
except ValueError as e:
    print(f"Adversarial protection error: {e}")
```

Output>>

```
Adversarial protection error: Query contains potentially adversarial patterns.
```

The `<script>` tag is flagged, so the guardrail raises an error before the query reaches the rest of the RAG pipeline. Note that this whitelist is strict: it also rejects harmless characters such as apostrophes, hyphens, and exclamation marks, so tune it to your use case. You can extend these checks to cover other attacks, such as prompt injection.
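One way to extend the check above is to look for specific suspicious patterns instead of rejecting every special character, which keeps ordinary punctuation such as apostrophes usable. The patterns and the `detect_injection` function below are illustrative assumptions covering script tags and two common prompt-injection phrasings; real attacks are far more varied, so treat this as one layer among several rather than a complete defense.

```python
import re

# Illustrative patterns (assumptions); real attacks are far more varied.
SUSPICIOUS_PATTERNS = [
    re.compile(r"<\s*/?\s*script\b", re.IGNORECASE),  # embedded <script> tags
    re.compile(r"ignore\s+(?:all\s+)?(?:previous|prior|above)\s+instructions", re.IGNORECASE),
    re.compile(r"(?:reveal|show|print)\s+(?:your|the)\s+system\s+prompt", re.IGNORECASE),
]


def detect_injection(query: str) -> bool:
    """Raise ValueError if any suspicious pattern matches; otherwise return True."""
    matched = [p.pattern for p in SUSPICIOUS_PATTERNS if p.search(query)]
    if matched:
        raise ValueError(f"Query matches {len(matched)} suspicious pattern(s).")
    return True


print(detect_injection("What's the deductible for my car?"))
# prints: True
```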

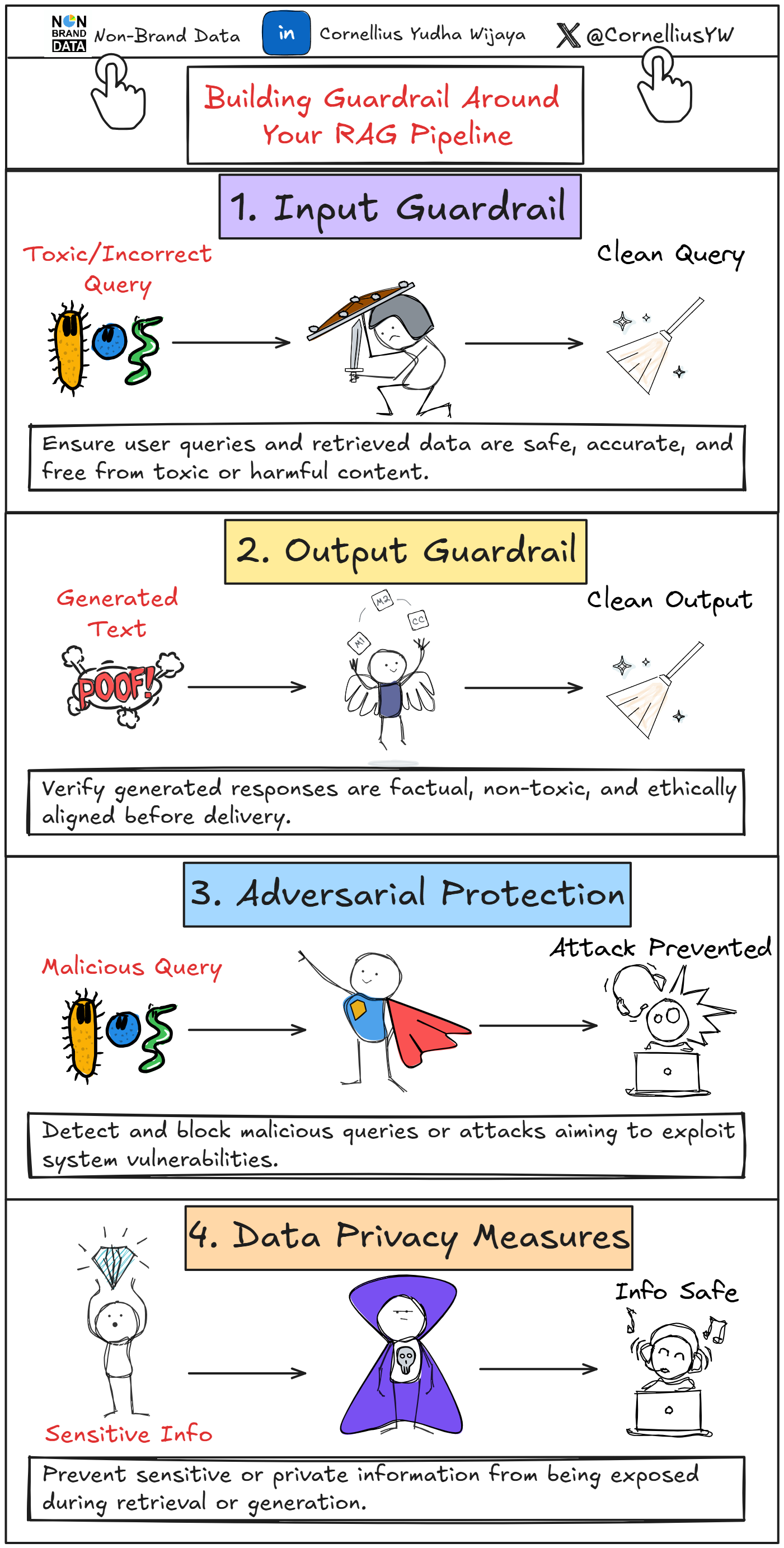

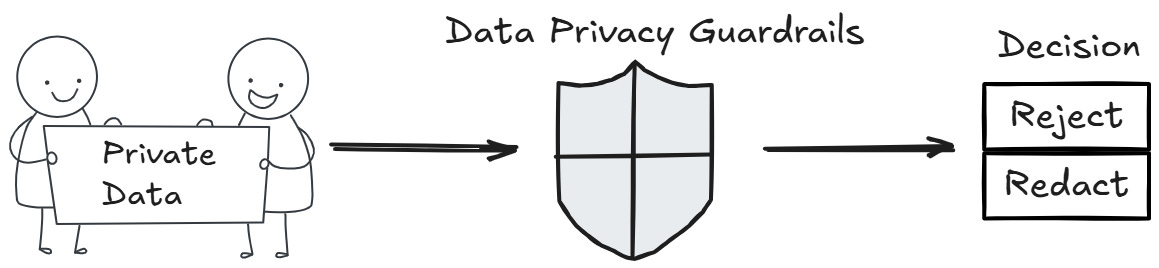

4. Data Privacy Measures

In general, the input and the output are where data leakage in a RAG system can do the most damage.

Data privacy measures ensure that sensitive or private information is not exposed during retrieval or generation. This includes:

Detecting and redacting personally identifiable information (PII) in retrieved data or generated responses.

Ensuring compliance with data protection regulations (e.g., GDPR, CCPA).

We want to ensure that the input, the retrieved context, and the generated output do not contain private data that the system or the user should not be able to access.

For example, we will use the Microsoft Presidio library to detect and redact personal information in the text.

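```python
from presidio_analyzer import AnalyzerEngine
from presidio_anonymizer import AnonymizerEngine

# Create the engines once; the analyzer loads an NLP model on initialization.
analyzer = AnalyzerEngine()
anonymizer = AnonymizerEngine()

def detect_and_redact_pii(text):
    """
    Use Presidio to detect and redact PII in the text.
    Raises ValueError if detection or anonymization fails.
    """
    try:
        results = analyzer.analyze(text=text, language="en")
        anonymized_text = anonymizer.anonymize(text=text, analyzer_results=results)
        return anonymized_text.text
    except Exception as e:
        raise ValueError(f"Data privacy error: {e}") from e
```

Then, we pass in a text containing private information.

```python
context = "John Doe lives at Street 123 Main and his phone number is +62808011111."

# Detect and redact PII in the context
result = detect_and_redact_pii(context)
print(result)
```

Output>>

```
<PERSON> lives at <LOCATION> and his phone number is <NRP>.
```

With the function above, the text still flows through the pipeline, but the detected private information is redacted before it reaches the LLM or the user. Keep in mind that detection is model-based and can miss or mislabel entities: here, the phone number was tagged as `<NRP>` (Presidio’s label for a nationality, religious, or political group) rather than as a phone number, although it was still masked.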
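Because Presidio’s recognizers can mislabel or miss entities, as the `<NRP>` label on the phone number above shows, you may want to add a simple pattern-based pass for structured identifiers. The sketch below is a hypothetical complement, not part of Presidio: two regular expressions (assumptions that will miss many real-world formats) replace email addresses and phone numbers with placeholders in the same `<ENTITY>` style as the output above.

```python
import re

# Simple patterns for structured identifiers; assumptions that will miss many formats.
PII_PATTERNS = {
    "EMAIL_ADDRESS": re.compile(r"\b[\w.+-]+@[\w-]+(?:\.[\w-]+)+\b"),
    "PHONE_NUMBER": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def redact_structured_pii(text: str) -> str:
    """Replace every match with a <LABEL> placeholder, applying the patterns in order."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"<{label}>", text)
    return text


print(redact_structured_pii("Contact john.doe@example.com or +62808011111 today."))
# prints: Contact <EMAIL_ADDRESS> or <PHONE_NUMBER> today.
```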

That’s all for the basic explanation of RAG Guardrails. We can now combine them into a basic RAG pipeline with the Guardrails in place.

```python
def secure_rag_pipeline(query):
    """
    Secure RAG Pipeline with integrated guardrails.
    Raises exceptions if any guardrail is triggered.
    """
    # Step 1: Input Guardrails
    validate_input(query)  # Raises ValueError if the query is toxic
    detect_adversarial_query(query)  # Raises ValueError if the query is adversarial

    # Step 2: Perform semantic search
    search_results = semantic_search(query)
    context = "\n".join(search_results['documents'][0])

    # Step 3: Data Privacy Measures
    safe_context = detect_and_redact_pii(context)  # Raises ValueError if PII detection fails

    # Step 4: Generate response
    response = generate_response(query, safe_context)

    # Step 5: Ensure response does not exceed the length limit
    limited_response = ensure_length_limit(response, max_length=1000)  # Raises ValueError if the response is too long

    # Step 6: Return the final safe and length-limited response
    return limited_response
```

I hope it has helped!
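Because each guardrail in `secure_rag_pipeline` is meant to signal a violation by raising `ValueError`, the code that calls the pipeline decides what the user finally sees. A minimal sketch of such a call site follows; `answer_safely` and `fake_pipeline` are hypothetical, and `fake_pipeline` only stands in for `secure_rag_pipeline` so the example runs without a vector store or an LLM.

```python
from typing import Callable


def answer_safely(query: str, pipeline: Callable[[str], str],
                  fallback: str = "Sorry, I can't help with that request.") -> str:
    """Call a guardrailed pipeline and turn guardrail violations into a polite fallback."""
    try:
        return pipeline(query)
    except ValueError as e:
        # Here we just print the reason; a production system would log it for monitoring.
        print(f"Guardrail triggered: {e}")
        return fallback


# Hypothetical stand-in for secure_rag_pipeline so this sketch runs on its own.
def fake_pipeline(query: str) -> str:
    if "<script>" in query:
        raise ValueError("Query contains potentially adversarial patterns.")
    return f"Answer to: {query}"


print(answer_safely("What does my policy cover?", fake_pipeline))
# prints: Answer to: What does my policy cover?
```

Catching only `ValueError` means unexpected errors from other components still surface instead of being hidden behind the fallback message.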

Is there anything else you’d like to discuss? Let’s dive into it together!
