Does SMOTE Add Noise to Imbalanced Data? - NBD Lite #25

Taking a look at the popular oversampling method

Imbalanced datasets are a recurring problem in tabular classification, and they occur naturally in many domains.

Common examples include fraud detection, cancer diagnosis, and spam filtering.

In truth, finding a perfectly balanced dataset in the real world is hard, so we usually have to work with the imbalance we are given.

However, a severe imbalance can skew the machine learning model toward the majority class, so it classifies the minority class poorly and model performance suffers.

That’s usually where we are taught to oversample the data, most often with the Synthetic Minority Over-sampling Technique (SMOTE).

But is SMOTE always beneficial? Or can it introduce noise? Let’s explore it together!

What is SMOTE?

As I have mentioned previously, SMOTE is an oversampling method.

In classic random oversampling, existing minority samples are simply duplicated until the class is large enough.

While this increases the number of minority samples, it gives the machine learning model no new information or variation.
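As a minimal sketch of that point, the snippet below duplicates minority rows with NumPy and checks how many distinct minority points exist before and after. The toy array and all variable names are made up for illustration.

```python
import numpy as np

rng = np.random.default_rng(42)

# Toy data (hypothetical): 8 majority rows (label 0) and 2 minority rows (label 1)
X = np.arange(20, dtype=float).reshape(10, 2)
y = np.array([0] * 8 + [1] * 2)

minority_idx = np.flatnonzero(y == 1)
n_needed = int((y == 0).sum() - (y == 1).sum())  # rows needed to reach 50/50

# Classic random oversampling: draw existing minority rows with replacement
extra_idx = rng.choice(minority_idx, size=n_needed, replace=True)
X_over = np.vstack([X, X[extra_idx]])
y_over = np.concatenate([y, y[extra_idx]])

# Count distinct minority points before and after oversampling
unique_before = np.unique(X[y == 1], axis=0).shape[0]
unique_after = np.unique(X_over[y_over == 1], axis=0).shape[0]

print("minority rows:", (y == 1).sum(), "->", (y_over == 1).sum())
print("distinct minority points:", unique_before, "->", unique_after)
```

The minority class grows to match the majority, but the number of distinct points stays the same, so the model only sees the same rows more often.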

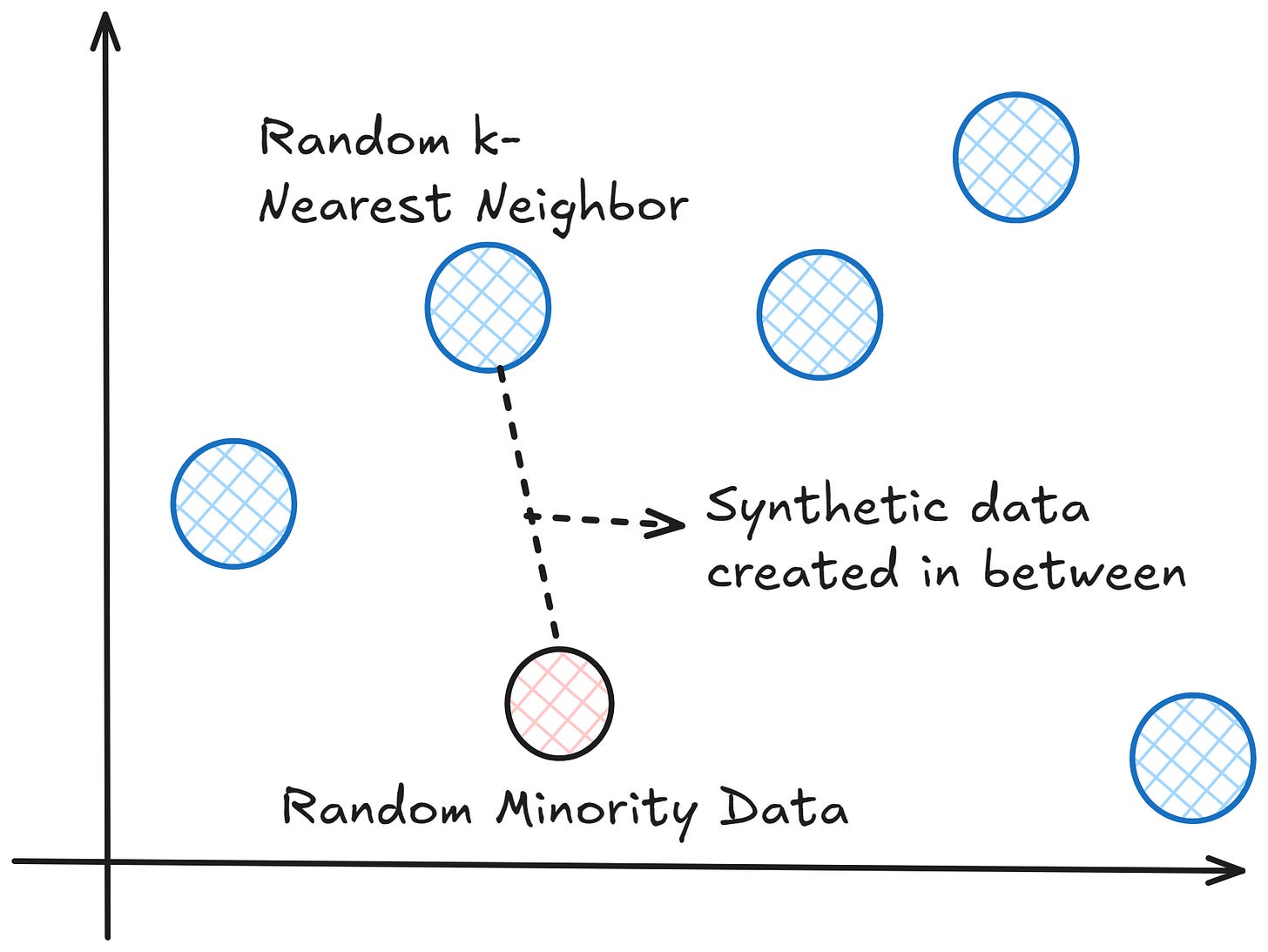

SMOTE instead uses the k-nearest neighbours algorithm to create synthetic data.

It starts by picking a random sample from the minority class and finding that sample’s k nearest neighbours within the same class.

It then picks one of those neighbours at random and creates a synthetic sample at a random point on the line segment between the two.

The procedure is repeated until the minority class has as many samples as the majority class.
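The steps above can be sketched in a few lines of NumPy. This is a simplified illustration, not the imbalanced-learn implementation: the function name `smote_sketch`, its parameters, and the toy minority points are assumptions made for this example.

```python
import numpy as np

def smote_sketch(X_min, n_new, k=5, seed=42):
    """Create n_new synthetic rows by interpolating between minority neighbours.

    Simplified sketch: assumes X_min holds at least two minority samples.
    """
    rng = np.random.default_rng(seed)
    X_min = np.asarray(X_min, dtype=float)
    k = min(k, len(X_min) - 1)  # cannot have more neighbours than other points

    # Pairwise Euclidean distances within the minority class
    diff = X_min[:, None, :] - X_min[None, :, :]
    dist = np.sqrt((diff ** 2).sum(axis=-1))
    np.fill_diagonal(dist, np.inf)  # a point is not its own neighbour
    neighbours = np.argsort(dist, axis=1)[:, :k]

    synthetic = np.empty((n_new, X_min.shape[1]))
    for i in range(n_new):
        base = rng.integers(len(X_min))    # 1. pick a random minority sample
        nb = rng.choice(neighbours[base])  # 2. pick one of its k neighbours
        gap = rng.random()                 # 3. random position in [0, 1)
        synthetic[i] = X_min[base] + gap * (X_min[nb] - X_min[base])
    return synthetic

# Toy minority class (hypothetical values)
X_min = np.array([[1.0, 1.0], [2.0, 1.5], [1.5, 2.0], [2.5, 2.5]])
new_points = smote_sketch(X_min, n_new=6, k=2)
print(new_points)
```

Every synthetic row lies on a straight line between two real minority points, so SMOTE adds variation but never leaves the region the minority class already covers.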

As popular as the method is, SMOTE doesn’t always work. Let’s try to experiment with SMOTE.

When SMOTE Works and When It Does Not

Before starting the experiment, let’s discuss the theoretical advantages and disadvantages of using SMOTE.

In general, the advantages of using SMOTE for imbalance cases are:

SMOTE helps balance the class distribution, improving the model's ability to generalize and predict minority classes accurately.

Many classifiers struggle with imbalanced data and end up biased toward the majority class. SMOTE can help improve recall and, in some cases, precision.

In cases of severe imbalance, SMOTE can be a better option than simple random oversampling as it prevents exact duplicates of data points, creating more diversity in the training set.

But, there are some disadvantages of SMOTE:

SMOTE creates synthetic data based on existing samples. If the original data is noisy or complex, the synthetic samples inherit and amplify that noise, which may hurt performance.

SMOTE could generate synthetic points that are too similar to existing data, leading to overfitting.

SMOTE can sometimes create synthetic data that doesn’t make sense in the real world, as it interpolates from existing data instead of replicating them.
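To make the last point concrete, here is a small sketch of the interpolation step applied to two rows that contain a count and a binary flag. The feature names and values are hypothetical and only serve the illustration.

```python
import numpy as np

# Hypothetical minority rows: [age, number_of_children, is_smoker (0 or 1)]
patient_a = np.array([30.0, 1.0, 0.0])
patient_b = np.array([50.0, 4.0, 1.0])

gap = 0.5  # SMOTE draws this at random from [0, 1); fixed here for clarity
synthetic = patient_a + gap * (patient_b - patient_a)

print(synthetic.tolist())  # [40.0, 2.5, 0.5]
```

The interpolated age is plausible, but 2.5 children and a smoker flag of 0.5 describe a patient who cannot exist in the real data.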

With these advantages and disadvantages in mind, let’s experiment with how different balance ratios affect model performance, with and without SMOTE.

import matplotlib.pyplot as plt
from imblearn.over_sampling import SMOTE
from sklearn.datasets import make_classification
from sklearn.ensemble import RandomForestClassifier
from sklearn.model_selection import train_test_split
from sklearn.metrics import precision_score, recall_score, f1_score

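def train_and_get_metrics(X, y, smote=False):
    """Train a random forest and return (precision, recall, f1) on a held-out test set."""
    X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.3, random_state=42)

    if smote:
        # Oversample the training split only, so the test set keeps the original distribution
        sm = SMOTE(random_state=42)
        X_train, y_train = sm.fit_resample(X_train, y_train)

    clf = RandomForestClassifier(random_state=42)
    clf.fit(X_train, y_train)
    y_pred = clf.predict(X_test)

    # Metrics are computed for label 1, the minority class in the imbalanced datasets
    precision = precision_score(y_test, y_pred)
    recall = recall_score(y_test, y_pred)
    f1 = f1_score(y_test, y_pred)
    return precision, recall, f1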

# Generate datasets with different balance ratios
datasets = [
    ("50%/50%", *make_classification(n_samples=1000, n_features=20, weights=[0.5, 0.5], random_state=42)),
    ("60%/40%", *make_classification(n_samples=1000, n_features=20, weights=[0.6, 0.4], random_state=42)),
    ("70%/30%", *make_classification(n_samples=1000, n_features=20, weights=[0.7, 0.3], random_state=42)),
    ("80%/20%", *make_classification(n_samples=1000, n_features=20, weights=[0.8, 0.2], random_state=42)),
    ("90%/10%", *make_classification(n_samples=1000, n_features=20, weights=[0.9, 0.1], random_state=42)),
]

metrics_without_smote = []
metrics_with_smote = []

for name, X, y in datasets:
    metrics_without_smote.append(train_and_get_metrics(X, y, smote=False))
    metrics_with_smote.append(train_and_get_metrics(X, y, smote=True))

# Plotting the results
precision_without, recall_without, f1_without = zip(*metrics_without_smote)
precision_with, recall_with, f1_with = zip(*metrics_with_smote)

labels = ["50/50", "60/40", "70/30", "80/20", "90/10"]
x = range(len(labels))

plt.figure(figsize=(15, 8))

# Plot Precision
plt.subplot(1, 3, 1)
plt.plot(x, precision_without, label='Without SMOTE', marker='o')
plt.plot(x, precision_with, label='With SMOTE', marker='o')
plt.xticks(x, labels, rotation=45)
plt.title('Precision')
plt.legend()

# Plot Recall
plt.subplot(1, 3, 2)
plt.plot(x, recall_without, label='Without SMOTE', marker='o')
plt.plot(x, recall_with, label='With SMOTE', marker='o')
plt.xticks(x, labels, rotation=45)
plt.title('Recall')

# Plot F1 Score
plt.subplot(1, 3, 3)
plt.plot(x, f1_without, label='Without SMOTE', marker='o')
plt.plot(x, f1_with, label='With SMOTE', marker='o')
plt.xticks(x, labels, rotation=45)
plt.title('F1 Score')

plt.tight_layout()

plt.show()

The graph shows that precision with SMOTE suffers, especially in the more severely imbalanced cases.

In contrast, recall is better with SMOTE.

This happens because SMOTE increases the number of minority class samples, which pushes the model to predict the minority class more often and so catch more of the true minority cases.

However, SMOTE may create synthetic data that does not represent the real-world distribution and instead acts as noise. Any noisy pattern already present in the minority class can be amplified too.

These noisy points can sit on the class boundary or overlap with majority class data, leading to more false positives, which decreases precision.
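To see why extra false positives lower precision even while recall rises, here is the arithmetic with made-up confusion-matrix counts. These are illustrative numbers for a hypothetical test set, not results from the experiment above.

```python
def precision_recall(tp, fp, fn):
    """Precision = TP / (TP + FP); recall = TP / (TP + FN)."""
    return tp / (tp + fp), tp / (tp + fn)

# Hypothetical counts for a test set with 30 minority samples (not measured results)
before = precision_recall(tp=15, fp=3, fn=15)  # model that rarely predicts the minority class
after = precision_recall(tp=24, fp=12, fn=6)   # model that predicts the minority class more often

print(f"before: precision={before[0]:.2f}, recall={before[1]:.2f}")
print(f"after:  precision={after[0]:.2f}, recall={after[1]:.2f}")
```

In this sketch the second model finds more of the true minority cases, so recall goes up, but it also flags more majority samples by mistake, so a smaller share of its positive predictions is correct.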

Of course, this does not always happen, as it also depends on the data quality and the available features.

Additionally, the more balanced your dataset is, the less SMOTE is needed, as the synthetic data would mostly add noise.

In conclusion, use SMOTE if:

You have a moderately imbalanced dataset,

You can accept a possible trade-off between precision and recall.

Additionally, remember that the synthetic points might not occur in real-world data.

Alternatives to SMOTE

Many alternative methods exist for handling imbalanced data, including:

Class Weighting: Gives misclassifications of the minority class a heavier penalty during training, without altering the data.

Cost-Sensitive Loss Functions: Loss functions that penalize errors made on the minority class more severely.

Tomek Links: An undersampling method that cleans up the data by removing samples from opposite-class nearest-neighbour pairs close to the decision boundary, usually the majority class sample.
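Of these, class weighting is the easiest to sketch. The snippet below computes per-class weights with the common "balanced" heuristic, `n_samples / (n_classes * count_per_class)`, on a hypothetical 90/10 label vector; the variable names are assumptions for this example.

```python
import numpy as np

# Hypothetical 90/10 label vector
y = np.array([0] * 900 + [1] * 100)

classes, counts = np.unique(y, return_counts=True)

# "Balanced" heuristic: rarer classes receive proportionally larger weights
weights = len(y) / (len(classes) * counts)
class_weight = {int(c): float(w) for c, w in zip(classes, weights)}

print(class_weight)
```

Here the minority class ends up weighted nine times as heavily as the majority class. In scikit-learn, passing `class_weight="balanced"` to `RandomForestClassifier` applies the same heuristic, so the experiment could be rerun with that argument instead of SMOTE and no synthetic rows would be created.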

There are many more alternatives; we could discuss them if you are interested.

That’s a quick explanation of how SMOTE can introduce noise.

Is there anything else you would love to discuss? Let’s talk about it together!
