Implement Explainable Boosting Machines (EBMs) for Interpretable Models - NBD Lite #39

Use a directly explainable model for your business

Machine learning models can be hard for business people to trust because they often don’t understand how the results are produced.

We can have the best model, but it is useless if the business does not use it. The best way to earn that trust is to explain why our model produces a certain output.

In a previous NBD Lite article, we discussed how to interpret machine learning models with partial dependence plots (PDPs).

In this article, we will discuss another practical approach to model explainability: Explainable Boosting Machines (EBMs).

So, what are EBMs, and how can they help you? Let’s explore it together!

Explainable Boosting Machines

An Explainable Boosting Machine (EBM) is a machine learning algorithm developed by the InterpretML team as a glassbox model, that is, a model designed for interpretability.

An EBM is a tree-based, cyclic gradient-boosting Generalized Additive Model (GAM) with automatic interaction detection. It is designed to reach accuracy comparable to random forests and boosted trees while remaining directly interpretable.
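To make the "additive" part concrete, the model form is usually written as below. This is a sketch of the general shape rather than a full specification: g is the link function (the logit for classification), each f_j is a learned function of a single feature, and each f_{i,j} is a learned pairwise interaction term.

```latex
g\left(\mathbb{E}[y]\right) = \beta_0 + \sum_{j} f_j(x_j) + \sum_{i \neq j} f_{i,j}(x_i, x_j)
```

Because every term depends on only one or two features, each one can be plotted and inspected on its own.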

EBMs are easily interpretable as the contribution of each feature to a final prediction can be visualized.
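To give a feel for what "cyclic gradient boosting" means, here is a minimal toy sketch in plain Python. It is not InterpretML's implementation. It assumes a squared-error loss, depth-1 stumps, a made-up dataset, and no bagging or interaction terms, and all function names are hypothetical. The key idea it illustrates is that each boosting round visits the features one at a time, so every small update belongs to exactly one feature.

```python
# Toy sketch of cyclic (round-robin) boosting for an additive model.
# Assumptions: squared-error loss, depth-1 stumps, no bagging or interactions.
# This is NOT InterpretML's implementation, only the general idea.

def fit_stump(xs, residuals):
    """Best single split on one feature: (threshold, left_mean, right_mean)."""
    mean = sum(residuals) / len(residuals)
    # Fallback when no split is possible: a constant stump.
    best = (float("inf"), float("inf"), mean, mean)
    for threshold in sorted(set(xs))[1:]:
        left = [r for x, r in zip(xs, residuals) if x < threshold]
        right = [r for x, r in zip(xs, residuals) if x >= threshold]
        left_mean, right_mean = sum(left) / len(left), sum(right) / len(right)
        error = (sum((r - left_mean) ** 2 for r in left)
                 + sum((r - right_mean) ** 2 for r in right))
        if error < best[0]:
            best = (error, threshold, left_mean, right_mean)
    return best[1:]


def cyclic_boost(X, y, rounds=200, learning_rate=0.1):
    """Fit one list of small stumps per feature, visiting features in turn."""
    n_features = len(X[0])
    intercept = sum(y) / len(y)
    stumps = [[] for _ in range(n_features)]
    predictions = [intercept] * len(y)
    for _ in range(rounds):
        for j in range(n_features):  # one feature at a time
            column = [row[j] for row in X]
            residuals = [t - p for t, p in zip(y, predictions)]
            threshold, left, right = fit_stump(column, residuals)
            left, right = learning_rate * left, learning_rate * right
            stumps[j].append((threshold, left, right))
            predictions = [p + (left if x < threshold else right)
                           for p, x in zip(predictions, column)]
    return intercept, stumps


def feature_score(feature_stumps, x):
    """Contribution of a single feature: the sum of its own stumps."""
    return sum(left if x < t else right for t, left, right in feature_stumps)


def predict(intercept, stumps, row):
    return intercept + sum(feature_score(s, x) for s, x in zip(stumps, row))


# Made-up additive data: a linear effect of x1 plus a step effect of x2.
X = [[a / 4, b / 2] for a in range(5) for b in range(3)]
y = [2 * x1 + (1.0 if x2 > 0.25 else 0.0) for x1, x2 in X]

intercept, stumps = cyclic_boost(X, y)
baseline_mse = sum((t - intercept) ** 2 for t in y) / len(y)
final_mse = sum((t - predict(intercept, stumps, row)) ** 2
                for t, row in zip(y, X)) / len(y)
print(f"baseline MSE {baseline_mse:.4f} -> boosted MSE {final_mse:.4f}")
```

Plotting `feature_score` for one feature over a range of values gives the kind of per-feature contribution curve that the EBM plots show.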

Let’s try it out in Python. First, we need to install the InterpretML package with the following command.

pip install interpret

With the package installed, we will import all the necessary packages and load the sample dataset.

from interpret.glassbox import ExplainableBoostingClassifier

from interpret import show

from sklearn.model_selection import train_test_split

from sklearn.metrics import accuracy_score

import pandas as pd

import seaborn as sns

import matplotlib.pyplot as plt

data = sns.load_dataset('titanic')

data = data.dropna()

X = data[['pclass', 'age', 'sibsp', 'fare', 'adult_male']]

y = data['survived']

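X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.2, random_state=42)

Note that dropna() runs on the full table before the feature columns are selected, so it also discards rows with missing values in columns we do not use and leaves a fairly small sample. With the dataset ready, let’s fit the EBM classification model to the training data.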

ebm = ExplainableBoostingClassifier(random_state=42)

ebm.fit(X_train, y_train)

y_pred = ebm.predict(X_test)

print("Accuracy:", accuracy_score(y_test, y_pred))

Accuracy: 0.757

The accuracy is reasonable, though there is room for improvement. This article focuses on explainability rather than tuning, so we will move on to interpreting the model.
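One quick way to put an accuracy number in context is to compare it with a majority-class baseline, that is, the accuracy of always predicting the most frequent label. The helper below is a hypothetical sketch and the labels are made up for illustration. They are not the Titanic test split.

```python
from collections import Counter


def majority_baseline_accuracy(labels):
    """Accuracy obtained by always predicting the most frequent label."""
    counts = Counter(labels)
    return counts.most_common(1)[0][1] / len(labels)


# Made-up labels for illustration only (not the article's test set).
toy_labels = [1, 1, 0, 1, 0, 1, 1, 0]
baseline = majority_baseline_accuracy(toy_labels)
print(f"Majority-class baseline: {baseline:.3f}")
```

With the article's variables, the same helper could be called on y_test and the result compared with the model's accuracy.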

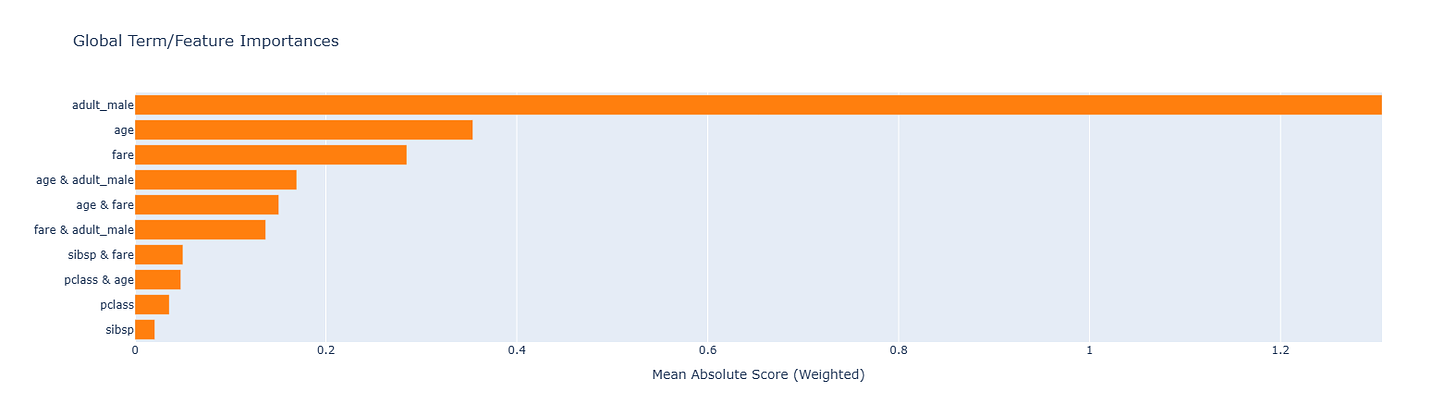

In the code below, we will explore the global explainability to understand the features' overall contribution to the model.

ebm_global = ebm.explain_global()

show(ebm_global)

In EBMs, the feature contribution is calculated based on how much each feature influences the model's prediction across all data in the dataset.

In the chart above, the most important features are adult_male, age, and fare, indicated by their rank at the top, which means they significantly impact the model’s decisions.

Additionally, the EBM captures interaction terms, such as age & adult_male and age & fare, which shows that the model picks up relationships between pairs of features. In other words, the effect of one feature depends on the value of another.
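The ranking idea can be sketched in a few lines. To our understanding, InterpretML's default overall importance is the average absolute contribution of each term, so the sketch below assumes that definition. The per-row scores are hypothetical values, not output from the fitted model, and the helper name is made up.

```python
def mean_abs_importance(contributions):
    """Average absolute score per term across rows.

    contributions: list of dicts mapping term name -> score for one row.
    """
    terms = contributions[0].keys()
    n_rows = len(contributions)
    return {term: sum(abs(row[term]) for row in contributions) / n_rows
            for term in terms}


# Hypothetical per-row scores in log-odds units (not real model output).
rows = [
    {"adult_male": -1.2, "age": 0.3, "fare": 0.1},
    {"adult_male": 0.8, "age": -0.5, "fare": 0.4},
    {"adult_male": -1.0, "age": 0.1, "fare": -0.1},
]

importance = mean_abs_importance(rows)
ranking = sorted(importance, key=importance.get, reverse=True)
print(ranking)
```

Taking the absolute value matters: a feature that pushes some predictions up and others down would otherwise look unimportant because its contributions cancel out.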

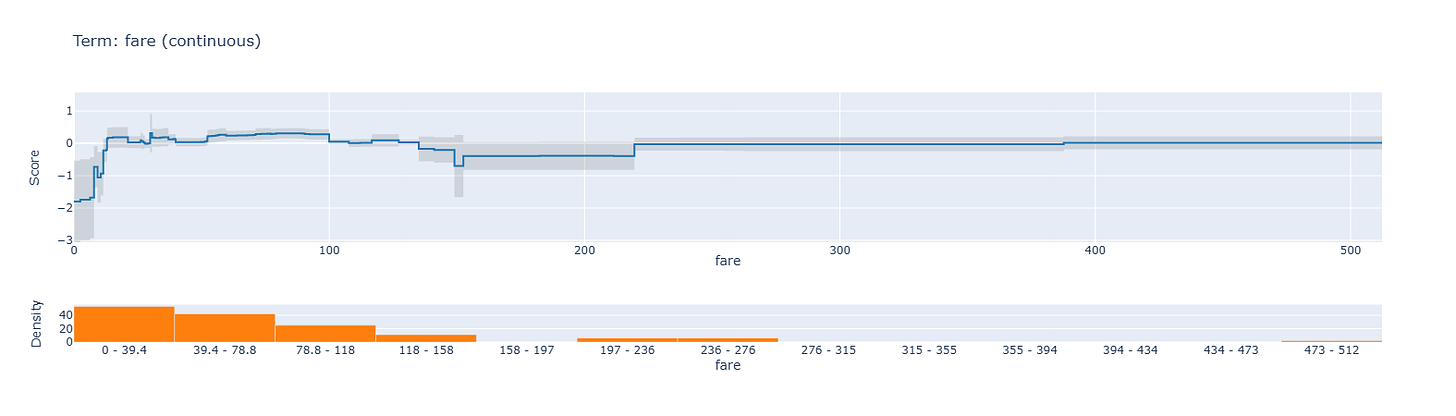

We can also examine each feature more closely. For example, this is the contribution plot for the fare feature.

This plot shows the effect of the fare feature on predictions in the EBM model. The top line graph illustrates the score associated with different fare values. Higher scores increase the probability of survival, while lower scores decrease it.

The bottom bar chart represents the dataset's density or distribution of fare values. It shows that most fares fall below 50, with fewer observations in higher fare ranges.
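Under the hood, a curve like this is a step function: the feature is split into bins and each bin carries one score. The sketch below shows that lookup with the standard library. The bin edges and scores are hypothetical values chosen for illustration, not taken from the fitted model.

```python
from bisect import bisect_right

# Hypothetical bin edges and per-bin scores for a fare-like feature.
# There is always one more score than there are edges.
bin_edges = [10.0, 30.0, 50.0, 100.0]
bin_scores = [-0.4, -0.1, 0.2, 0.5, 0.3]


def shape_score(value, edges, scores):
    """Look up the piecewise-constant contribution for a feature value."""
    return scores[bisect_right(edges, value)]


for fare in (7.25, 30.0, 80.0, 250.0):
    print(fare, shape_score(fare, bin_edges, bin_scores))
```

This is also why the density chart matters: a bin with very few observations has a score estimated from little data, so the curve in sparse regions should be read with caution.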

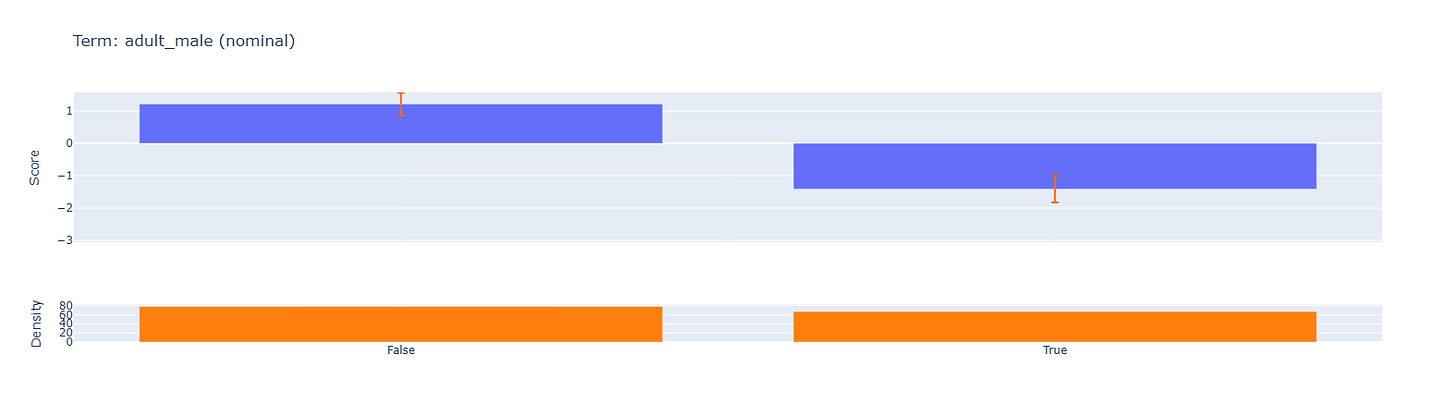

It’s also possible to see the contribution of nominal features such as the adult_male feature.

As with the fare plot, the top chart for adult_male shows that the False value pushes the prediction towards survival, while the bottom chart shows the data density across the dataset.

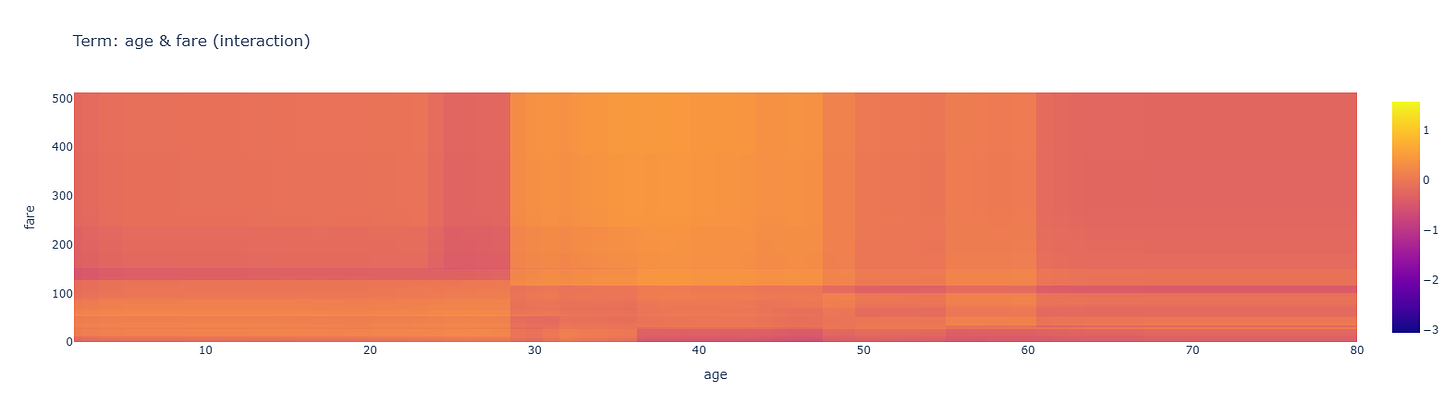

Because the model includes interaction terms, we can also view their contribution plots. For example, below is the interaction between the age and fare features.

The right-hand colour scale represents the score for different combinations of age and fare values, with warmer colours (yellow) indicating higher scores (positive impact on the outcome) and cooler colours (purple) indicating lower scores (negative effect).

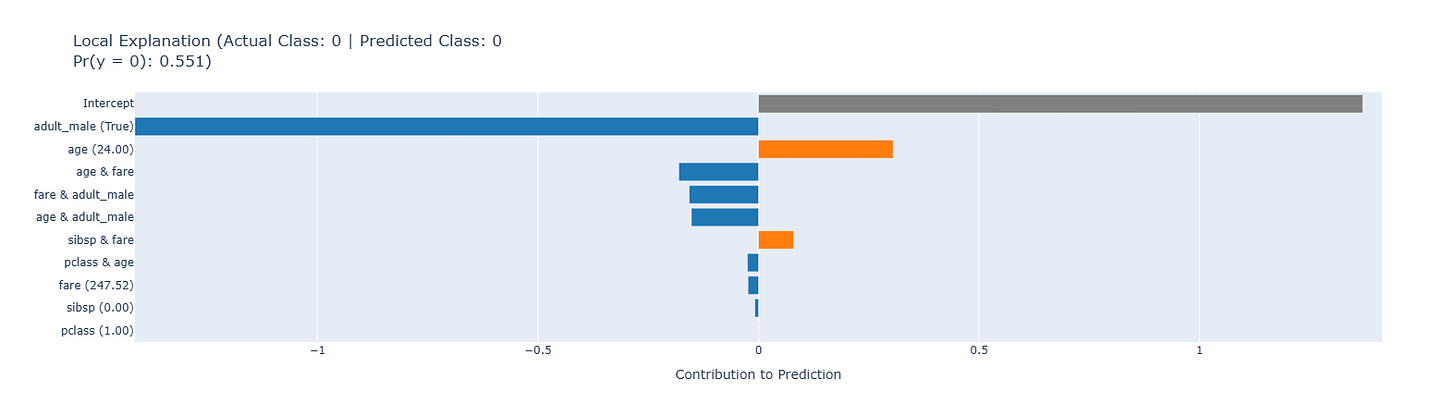

Lastly, we can see individual explanations for each prediction. For example, we take the first five rows of the test set and examine how each prediction is explained.

ebm_local = ebm.explain_local(X_test[:5], y_test[:5])

show(ebm_local)

This local explanation plot shows that the model predicts class 0 (probability 0.551), matching the actual class. The strongest factor pulling the prediction towards 0 is adult_male (True), which reduces the likelihood of a positive outcome. Overall, the prediction is mainly driven by the negative influence of being an adult male, with minor contributions from the other features.

As an additional point, the intercept is the baseline score or starting point for predictions before any feature contributions are added.
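The arithmetic behind a local explanation can be sketched as follows. For a binary classifier, the intercept and the term contributions are summed into one score in log-odds units, and a sigmoid turns that score into a probability. The sketch assumes the 0.551 reported above is the probability of the predicted class 0. The intercept and contribution values are hypothetical, chosen only so the sum lands near that example.

```python
import math


def sigmoid(score):
    """Convert a log-odds score into a probability."""
    return 1.0 / (1.0 + math.exp(-score))


def logit(probability):
    """Convert a probability back into a log-odds score."""
    return math.log(probability / (1.0 - probability))


def predict_proba_positive(intercept, contributions):
    """P(class 1) = sigmoid(intercept + sum of term contributions)."""
    return sigmoid(intercept + sum(contributions.values()))


# Class 0 was predicted with probability 0.551, so P(class 1) = 0.449.
p_survived = 1.0 - 0.551
total_score = logit(p_survived)  # negative, because class 0 is more likely
print(f"Total additive score: {total_score:.3f}")

# Hypothetical breakdown of a similar score (not real model output).
intercept = 0.1
contributions = {"adult_male": -0.9, "age": 0.2, "fare": 0.15,
                 "pclass": 0.2, "sibsp": 0.05}
print(f"P(survived): {predict_proba_positive(intercept, contributions):.3f}")
```

A single large negative bar, such as adult_male here, can outweigh several small positive ones, which is the pattern the local plot shows.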

That’s all you need to know about EBM.

Is there anything else you would like to discuss? Let’s talk about it together!
