Introducing DataDreamer: A Python Package for Easy Synthetic Data Generation and LLM Training Workflows

Efficiently develop your LLM in a few lines of code

Large language models (LLMs) have become some of the most widely used models in the world. Their ability to generate text and act as reasoning agents gives them an advantage that many earlier models lacked. One application is synthetic text generation that mimics real-world data.

Synthetic data has become increasingly valuable because it can approximate real-world data and lets us improve a model's capabilities without spending a lot of money on fine-tuning data. Of course, real data is still the standard, but that doesn't mean we can't use synthetic data for many purposes.

Recently, I came across an open-source project called DataDreamer that promotes its strengths in prompting, synthetic data generation, and training workflows.

So, how true is that? How can we use DataDreamer in our workflow? Let's find out.

DataDreamer

DataDreamer is an open-source package that uses LLMs to generate synthetic data and run the subsequent workflow, including enriching and cleaning existing data, fine-tuning models, and more. The project provides many example workflows.

In our example, we will use DataDreamer to generate synthetic arXiv NLP research abstracts and then generate a LinkedIn post from each abstract. The data will then be used to fine-tune a HuggingFace transformers model that can generate the LinkedIn post text.

The first thing to do is install the package.

    pip install datadreamer.dev

Next, we set up the DataDreamer session. The session is the first thing we need to start before running any steps. The code looks like this.

    from datadreamer import DataDreamer

    with DataDreamer('./output/'):
        ...  # run the steps here

We need an LLM to perform the tasks, and any text generation model will do, such as GPT-4. However, this example uses a HuggingFace transformers model because GPT-4 would cost too much for a simple example.

First, let's see how synthetic dataset generation works with DataDreamer. In the code below, we generate synthetic arXiv NLP research abstracts (DataFromPrompt) and a dataset of LinkedIn posts (ProcessWithPrompt) based on the generated abstracts. To check the data, we also export the results to CSV files so we can explore them.

We use the open-source GPT-2 model to generate the text for this example. For simplicity, we generate only 50 rows. The whole code looks like this.

    from datadreamer import DataDreamer
    from datadreamer.llms import HFTransformers
    from datadreamer.steps import DataFromPrompt, ProcessWithPrompt

    with DataDreamer("./output"):
        # Load GPT-2 through HuggingFace transformers
        llm_model = HFTransformers(model_name="openai-community/gpt2")

        # Generate synthetic arXiv-style research paper abstracts with GPT-2
        arxiv_dataset = DataFromPrompt(
            "Generate Research Paper Abstracts",
            args={
                "llm": llm_model,
                "n": 50,
                "temperature": 1.2,
                "instruction": (
                    "Generate an arXiv abstract of an NLP research paper."
                    " Return just the abstract, no titles."
                ),
            },
            outputs={"generations": "abstracts"},
        )

        # Turn each generated abstract into a LinkedIn post
        abstracts_and_post = ProcessWithPrompt(
            "Generate LinkedIn post from Abstracts",
            inputs={"inputs": arxiv_dataset.output["abstracts"]},
            args={
                "llm": llm_model,
                "instruction": (
                    "Given the abstract, write a LinkedIn post to summarize the work."
                ),
                "top_p": 1.0,
            },
            outputs={"inputs": "abstracts", "generations": "linkedin_post"},
        )

        # Export both datasets to CSV so we can inspect them
        arxiv_dataset.export_to_csv('arxiv_dataset.csv')
        abstracts_and_post.export_to_csv('linkedin_post.csv')

In the code above, we have 4 steps to generate synthetic data and save them into CSV files.

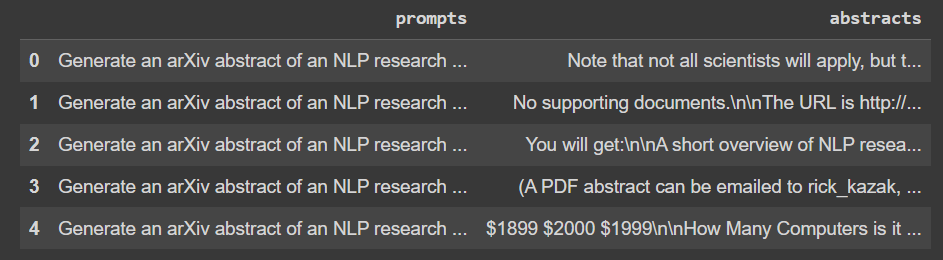

Let's take a look at our generated data. First, let's see the synthetic arXiv dataset.

    import pandas as pd

    df_arxiv = pd.read_csv('arxiv_dataset.csv')
    df_arxiv.head()

The result is not that good. This is because we use GPT-2, which cannot reliably follow our instruction. Nevertheless, we can see how easy it is to generate synthetic data with DataDreamer.

Let’s see the LinkedIn post dataset.

    df_linkedin = pd.read_csv('linkedin_post.csv')
    df_linkedin.head()

Here, too, the posts are of poor quality.

Nevertheless, DataDreamer makes it easy to generate the synthetic data. With a better model, we would get much more reliable data.
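Since a small model like GPT-2 can produce empty, very short, or repeated outputs, it may help to screen the exported rows before using them. Below is a minimal sketch using only the standard library. The column names come from the `outputs` mapping above, while the sample rows, the `filter_rows` helper, and the `MIN_CHARS` threshold are hypothetical and only for illustration.

```python
import csv
import io

# Hypothetical sample standing in for linkedin_post.csv; the column names
# match the `outputs` mapping used in the article.
SAMPLE_CSV = """abstracts,linkedin_post
"We propose a new attention variant for long documents.","Excited to share our work on attention for long documents."
"We study tokenization for low-resource languages.",""
"We propose a new attention variant for long documents.","Excited to share our work on attention for long documents."
"We evaluate prompt robustness across tasks.","ok"
"""

MIN_CHARS = 20  # assumed threshold; tune it for your own data


def filter_rows(rows, min_chars=MIN_CHARS):
    """Keep rows whose post is long enough and not an exact duplicate."""
    seen = set()
    kept = []
    for row in rows:
        post = (row.get("linkedin_post") or "").strip()
        if len(post) < min_chars:
            continue  # empty or too short to be a useful post
        if post in seen:
            continue  # exact duplicate of an earlier generation
        seen.add(post)
        kept.append(row)
    return kept


rows = list(csv.DictReader(io.StringIO(SAMPLE_CSV)))
clean_rows = filter_rows(rows)
print(f"kept {len(clean_rows)} of {len(rows)} rows")
```

To run this on the real export, replace the `io.StringIO(SAMPLE_CSV)` argument with an opened `linkedin_post.csv` file. A length and duplicate check like this only catches the most obvious failures; it does not judge whether a post actually summarizes its abstract.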

Lastly, we can train a transformers model on the synthetic dataset we just generated. To do that, we expand our previous code to train and publish the model.

Before that, we must generate an API access token for HuggingFace. Go to the HuggingFace website and generate an access token with the Write role.

Then log in to your HuggingFace account with the following code.

    from huggingface_hub import notebook_login

    notebook_login()

For this example, we fine-tune the Google T5 v1.1 base model (google/t5-v1_1-base) and publish it to the intended path. Replace 'yourhfpath' with your own HuggingFace path to make sure it's published in the correct place.

Before executing the code, make sure that you have already created repositories on HuggingFace for the dataset and the model.

    from datadreamer import DataDreamer
    from datadreamer.llms import HFTransformers
    from datadreamer.steps import DataFromPrompt, ProcessWithPrompt
    from datadreamer.trainers import TrainHFFineTune
    from peft import LoraConfig

    with DataDreamer("./output"):
        # Load GPT-2 through HuggingFace transformers
        llm_model = HFTransformers(model_name="openai-community/gpt2")

        # Generate synthetic arXiv-style research paper abstracts
        arxiv_dataset = DataFromPrompt(
            "Generate Research Paper Abstracts",
            args={
                "llm": llm_model,
                "n": 50,
                "temperature": 1.2,
                "instruction": (
                    "Generate an arXiv abstract of an NLP research paper."
                    " Return just the abstract, no titles."
                ),
            },
            outputs={"generations": "abstracts"},
        )

        # Turn each generated abstract into a LinkedIn post
        abstracts_and_post = ProcessWithPrompt(
            "Generate LinkedIn post from Abstracts",
            inputs={"inputs": arxiv_dataset.output["abstracts"]},
            args={
                "llm": llm_model,
                "instruction": (
                    "Given the abstract, write a LinkedIn post to summarize the work."
                ),
                "top_p": 1.0,
            },
            outputs={"inputs": "abstracts", "generations": "linkedin_post"},
        )

        # Create training and validation splits
        splits = abstracts_and_post.splits(train_size=0.90, validation_size=0.10)

        # Fine-tune T5 with LoRA to map abstracts to LinkedIn posts
        trainer = TrainHFFineTune(
            "Train an Abstract => LinkedIn Post Model",
            model_name="google/t5-v1_1-base",
            peft_config=LoraConfig(),
        )
        trainer.train(
            train_input=splits["train"].output["abstracts"],
            train_output=splits["train"].output["linkedin_post"],
            validation_input=splits["validation"].output["abstracts"],
            validation_output=splits["validation"].output["linkedin_post"],
            epochs=5,
            batch_size=8,
        )

        # Publish the synthetic dataset
        abstracts_and_post.publish_to_hf_hub(
            "yourhfpath/abstracts_and_post",
            train_size=0.90,
            validation_size=0.10,
        )

        # Publish the trained model
        trainer.publish_to_hf_hub("yourhfpath/abstracts_to_post_model")

If everything goes well, you can see the model and dataset published in their respective repositories.
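To make the `train_size=0.90` and `validation_size=0.10` arguments concrete, here is a plain-Python sketch of a 90/10 split on 50 rows. It is only an illustration of the arithmetic, not DataDreamer's implementation; the `split_rows` helper and the fixed seed are hypothetical.

```python
import random


def split_rows(rows, train_size=0.90, seed=0):
    """Shuffle a copy of rows and cut it into train and validation lists."""
    shuffled = list(rows)
    random.Random(seed).shuffle(shuffled)
    cut = round(len(shuffled) * train_size)
    return shuffled[:cut], shuffled[cut:]


rows = [f"example {i}" for i in range(50)]  # 50 rows, as in the article
train, validation = split_rows(rows)
print(len(train), len(validation))  # 45 5
```

With only 50 generated rows, the validation set holds just 5 examples, so validation metrics during training will be noisy. Generating more rows gives a more trustworthy signal.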

It’s pretty easy to use, right? DataDreamer streamlines all the complicated code into simple, intuitive steps.

There is still much more you can do with DataDreamer. I suggest visiting the documentation to learn more.

Conclusion

DataDreamer is an open-source Python package that streamlines synthetic data generation and LLM training workflows into a few lines of code. It's easy to use and versatile, so it can fit many use cases.