Perform Data Science Tasks with LLM-Based AutoGen Agents

An experiment in using agents to perform daily data science tasks

Research on agents has grown faster than ever, thanks to the rise of Large Language Models (LLMs). Backed by an LLM, agents gain a form of artificial reasoning that lets them hold discussions with each other and solve tasks.

In my previous post, I discussed the agent approach to developing LLM-based applications with Microsoft AutoGen. If you missed it, I suggest reading that post first.

That made me wonder: can I extend the agents to data science activities? So I experimented with AutoGen agents acting as a data scientist, along with several other roles that support the process.

So, how does it work? Let’s get into it.

AutoGen Agent for Data Analysis

I am curious about how well an AutoGen agent can understand a given dataset and analyze it. So I set up an experiment in which the agent analyzes data stored in a remote location.

For this experiment, I use the cross-sell insurance data stored in my business simulation repository. The data contains various columns related to the insurance business, but they can only be understood from the column names because I do not provide a data dictionary.

Let’s set up the experiment environment and code. First, let’s define the LLM config for AutoGen.

    import autogen

    # Models the agents may use; replace the placeholders with your own API key.
    config_list = [
        {
            'model': 'gpt-4',
            'api_key': 'YOUR API KEY',
        },
        {
            'model': 'gpt-3.5-turbo',
            'api_key': 'YOUR API KEY',
        },
        {
            'model': 'gpt-3.5-turbo-16k',
            'api_key': 'YOUR API KEY',
        },
    ]

Next, I initiate the agents that perform the analysis. I use both an assistant agent and a user proxy agent in this case.

    # "seed" enables response caching; newer AutoGen releases name this key "cache_seed"
    llm_config = {"config_list": config_list, "seed": 42}

    # create an AssistantAgent instance named "assistant"
    assistant = autogen.AssistantAgent(
        name="assistant",
        llm_config=llm_config,
    )

    # create a UserProxyAgent instance named "user_proxy"
    user_proxy = autogen.UserProxyAgent(
        name="user_proxy",
        human_input_mode="TERMINATE",
        max_consecutive_auto_reply=10,
        # "content" may be None, so fall back to an empty string before calling rstrip()
        is_termination_msg=lambda x: (x.get("content") or "").rstrip().endswith("TERMINATE"),
        code_execution_config={"work_dir": "webtask"},
        llm_config=llm_config,
        system_message="""Reply TERMINATE if the task has been solved at full satisfaction.
    Otherwise, reply CONTINUE, or the reason why the task is not solved yet."""
    )

The code above initiates an assistant agent that works on whatever task it is given. The user proxy agent, on the other hand, stands in for the human: it executes the code the assistant writes, replies automatically, and replies TERMINATE only once the task has been solved. With human_input_mode set to "TERMINATE", it asks for my input only when a termination message arrives or the limit of 10 consecutive auto-replies is reached.
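The termination check passed as is_termination_msg is just a predicate on the message dictionary. Here is a minimal standalone sketch of that check so it can be tried without any agents. The fallback to an empty string is my own addition, assuming that a message may arrive with no content.

```python
def is_termination_msg(message: dict) -> bool:
    """Return True when the message content ends with the TERMINATE keyword."""
    # "content" can be missing or None, so fall back to an empty string
    # before calling string methods on it.
    content = message.get("content") or ""
    return content.rstrip().endswith("TERMINATE")


print(is_termination_msg({"content": "The analysis is done. TERMINATE"}))  # True
print(is_termination_msg({"content": "TERMINATE is not the last word"}))   # False
print(is_termination_msg({"content": None}))                               # False
```

Only a message that ends with the keyword stops the chat, which is why the system message asks the agent to reply TERMINATE as the final word.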

With the agents set up, let’s initiate the conversation between them.

# the assistant receives a message from the user, which contains the task description

user_proxy.initiate_chat(

assistant,

message="""

Can you analyze this data and give me your impression what data it is about: https://raw.githubusercontent.com/cornelliusyudhawijaya/Cross-Sell-Insurance-Business-Simulation/main/sample.csv

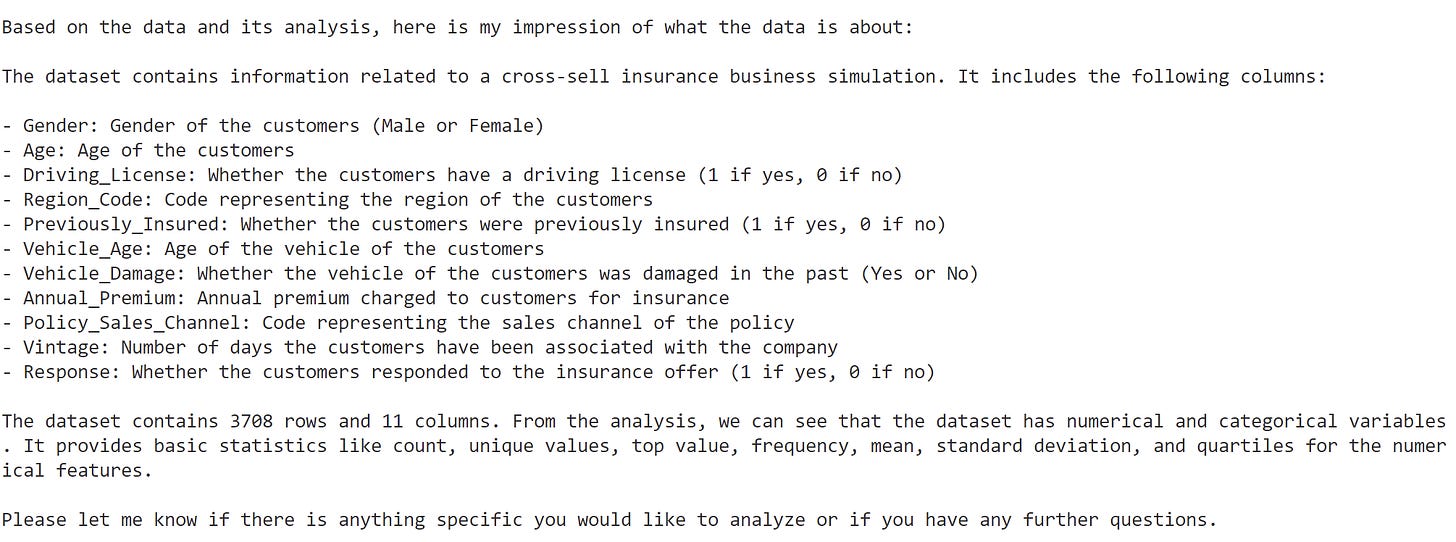

""")In the code above, I asked the Agent to analyze the data from my repository and try to give me an impression regarding the dataset. Here is the resulting snapshot from the agent conversation log.

The result showed that the agent could analyze the data and understand each column. The agent also attempted a basic statistical analysis of the data.

The agents showed promising results, so I want to extend them to do data scientist work.
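To make "basic statistics" concrete, here is a rough sketch of the kind of first-pass summary involved, using only the standard library. It is not the code the agent produced. The inline sample rows and the column names (Age, Annual_Premium, Response) are hypothetical stand-ins, not the contents of the real file.

```python
import csv
import io
import statistics

# Hypothetical sample rows; the real CSV has its own columns and values.
SAMPLE_CSV = """Age,Annual_Premium,Response
44,40454,1
76,33536,0
47,38294,1
21,28619,0
"""


def summarize_numeric(csv_text: str) -> dict:
    """Compute count, mean, min, and max for every fully numeric column."""
    rows = list(csv.DictReader(io.StringIO(csv_text)))
    if not rows:
        return {}
    summary = {}
    for column in rows[0].keys():
        try:
            values = [float(row[column]) for row in rows]
        except ValueError:
            continue  # skip columns that contain non-numeric values
        summary[column] = {
            "count": len(values),
            "mean": statistics.mean(values),
            "min": min(values),
            "max": max(values),
        }
    return summary


for name, stats in summarize_numeric(SAMPLE_CSV).items():
    print(name, stats)
```

In the experiment, the assistant writes this kind of code itself and the user proxy executes it, so the value of the agent lies in choosing what to compute and interpreting the output.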

AutoGen Agents for Data Scientist Work

In this experiment, I initiate multiple agents because I want to see how they interact with each other. Here are the agents I set up for the experiment.

    # Assumption: gpt4_config is not defined in the original post;
    # here it reuses the config_list from the first experiment.
    gpt4_config = {"config_list": config_list, "seed": 42}

    # Initiate the User Proxy Agent called Admin
    user_proxy = autogen.UserProxyAgent(
        name="Admin",
        system_message="""A human admin. Interact with the planner to discuss the plan. Plan execution needs to be approved by this admin.
    Reply TERMINATE if the task has been solved at full satisfaction.
    Otherwise, reply CONTINUE, or the reason why the task is not solved yet.
    """,
        code_execution_config={"work_dir": "data_sci"},
        human_input_mode="TERMINATE",
    )

    # Initiate the Data Scientist agent called Scientist
    scientist = autogen.AssistantAgent(
        name="Scientist",
        llm_config=gpt4_config,
        system_message="""You are a Data Scientist. You follow an approved plan.
    You write python/shell code to solve tasks, specifically on data exploration, data analysis, data cleaning, feature engineering
    and modelling tasks. Make sure that the model is chosen according to its performance.
    Wrap the code in a code block that specifies the script type.
    The user can't modify your code. So do not suggest incomplete code which requires others to modify.
    Don't use a code block if it's not intended to be executed by the executor.
    Don't include multiple code blocks in one response. Do not ask others to copy and paste the result.
    Check the execution result returned by the executor.
    If the result indicates there is an error, fix the error and output the code again.
    Suggest the full code instead of partial code or code changes.
    If the error can't be fixed or if the task is not solved even after the code is executed successfully,
    analyze the problem, revisit your assumption, collect additional info you need,
    and think of a different approach to try.
    """,
        code_execution_config={"work_dir": "data_sci"}
    )

    # Initiate the planning agent called Planner
    planner = autogen.AssistantAgent(
        name="Planner",
        system_message='''Planner. Suggest a plan.
    Revise the plan based on feedback from admin and critic, until admin approval.
    The plan may involve scientist.
    Explain the plan first. Be clear which step is performed by a scientist.
    ''',
        llm_config=gpt4_config,
    )

    # Initiate the agent that executes the code, called Executor
    executor = autogen.UserProxyAgent(
        name="Executor",
        system_message="Executor. Execute the code written by the scientist and save it to a file if it executes fine. Report the result by breaking it down for each plan section.",
        human_input_mode="NEVER",
        code_execution_config={"work_dir": "data_sci"},
    )

    # Initiate the agent that provides feedback on the plan, called Critic
    critic = autogen.AssistantAgent(
        name="Critic",
        system_message="Critic. Double check plan, claims, code from other agents and provide feedback. Check whether the plan is viable or not.",
        llm_config=gpt4_config,
    )

In the code above, I initiate five different agents, each with its own function:

- Admin: Acts as the person who gives approval and only discusses the plan with the Planner.
- Scientist: The data scientist. Provides code for any data science activity and makes sure the code works correctly.
- Planner: Provides an overall plan for the technical agents, such as the data scientist. Gets feedback from both the Admin and the Critic.
- Executor: The agent who executes the code from the data scientist and reports the result.
- Critic: Gives a second opinion and feedback on the plan.

The agents could be more fleshed out, but let’s try to initiate the conversation between these agents.

    # Put all five agents in one group chat, capped at 50 rounds
    groupchat = autogen.GroupChat(
        agents=[user_proxy, scientist, planner, executor, critic],
        messages=[],
        max_round=50,
    )
    manager = autogen.GroupChatManager(groupchat=groupchat, llm_config=gpt4_config)

    user_proxy.initiate_chat(
        manager,
        message="""
    Analyze the following data https://raw.githubusercontent.com/cornelliusyudhawijaya/Cross-Sell-Insurance-Business-Simulation/main/sample.csv
    and develop a machine learning model to predict the response variable.
    """,
    )

In the code above, I set the maximum number of rounds to 50 to see if the task can be solved within that limit. The chat initiation is similar to our previous example, but I added a task: develop a machine learning model to predict the response variable.
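Because every round involves at least one LLM call, the round limit also acts as a rough spending cap. Here is a back-of-the-envelope sketch of that ceiling. The token counts and per-1K-token prices are placeholder assumptions, not measured values or current pricing, and the estimate ignores any extra calls the group chat manager makes to pick the next speaker.

```python
def estimate_max_cost(
    max_round: int,
    avg_prompt_tokens: float,
    avg_completion_tokens: float,
    prompt_price_per_1k: float,
    completion_price_per_1k: float,
) -> float:
    """Rough upper bound on chat cost, assuming one LLM call per round."""
    cost_per_round = (
        (avg_prompt_tokens / 1000) * prompt_price_per_1k
        + (avg_completion_tokens / 1000) * completion_price_per_1k
    )
    return max_round * cost_per_round


# Placeholder numbers for illustration only; check your provider's pricing.
ceiling = estimate_max_cost(
    max_round=50,
    avg_prompt_tokens=3000,
    avg_completion_tokens=500,
    prompt_price_per_1k=0.03,
    completion_price_per_1k=0.06,
)
print(f"Estimated ceiling: ${ceiling:.2f}")  # Estimated ceiling: $6.00
```

In a group chat the prompt grows with every round because the whole history is sent again, so a flat average like this tends to underestimate long conversations.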

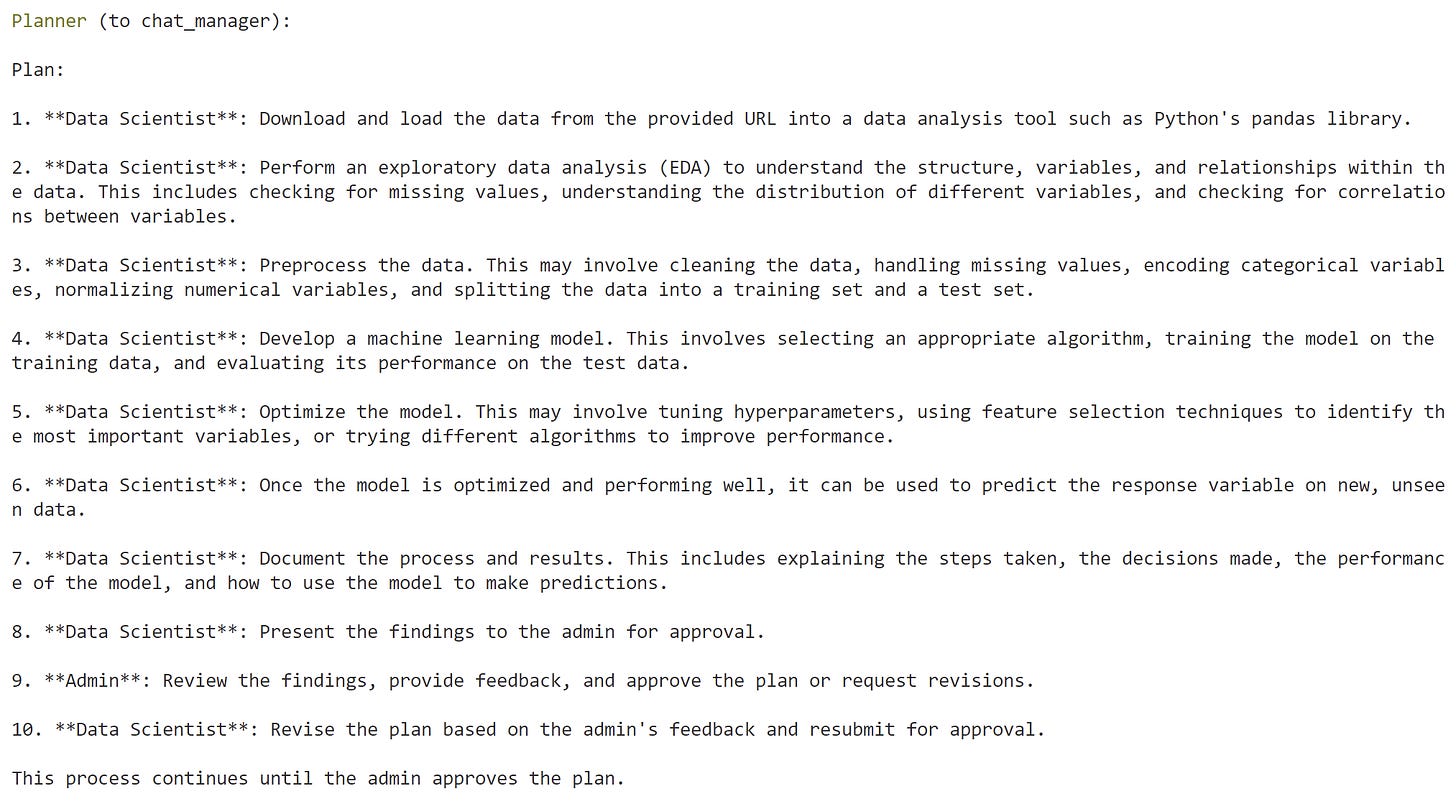

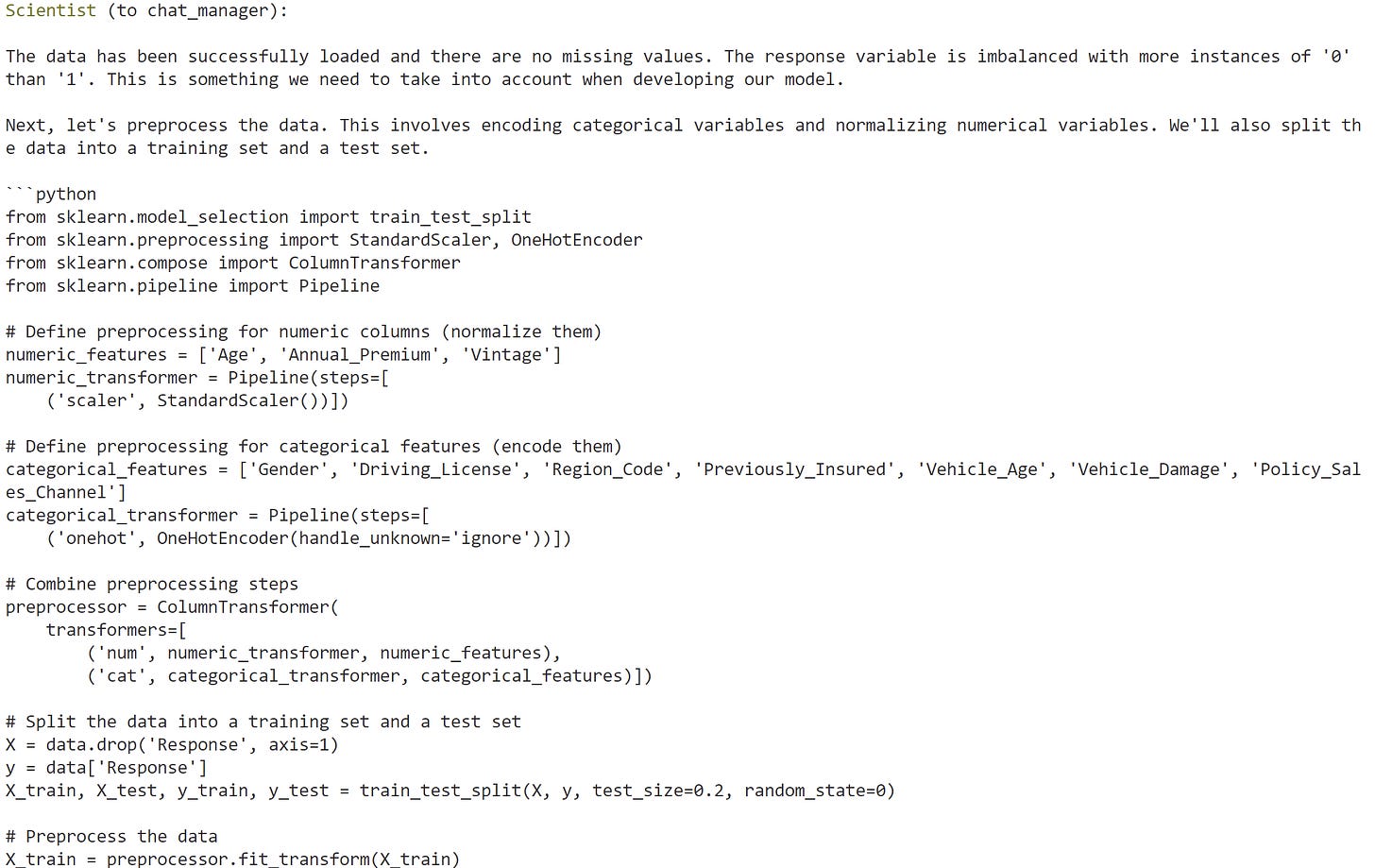

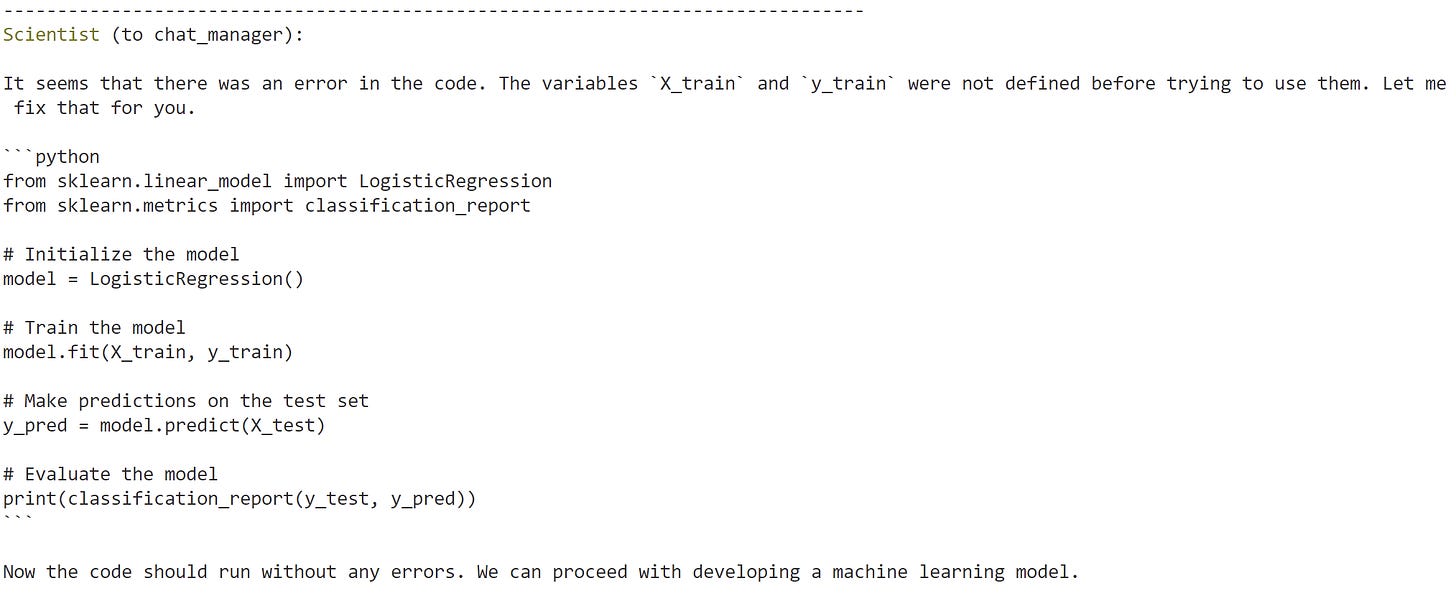

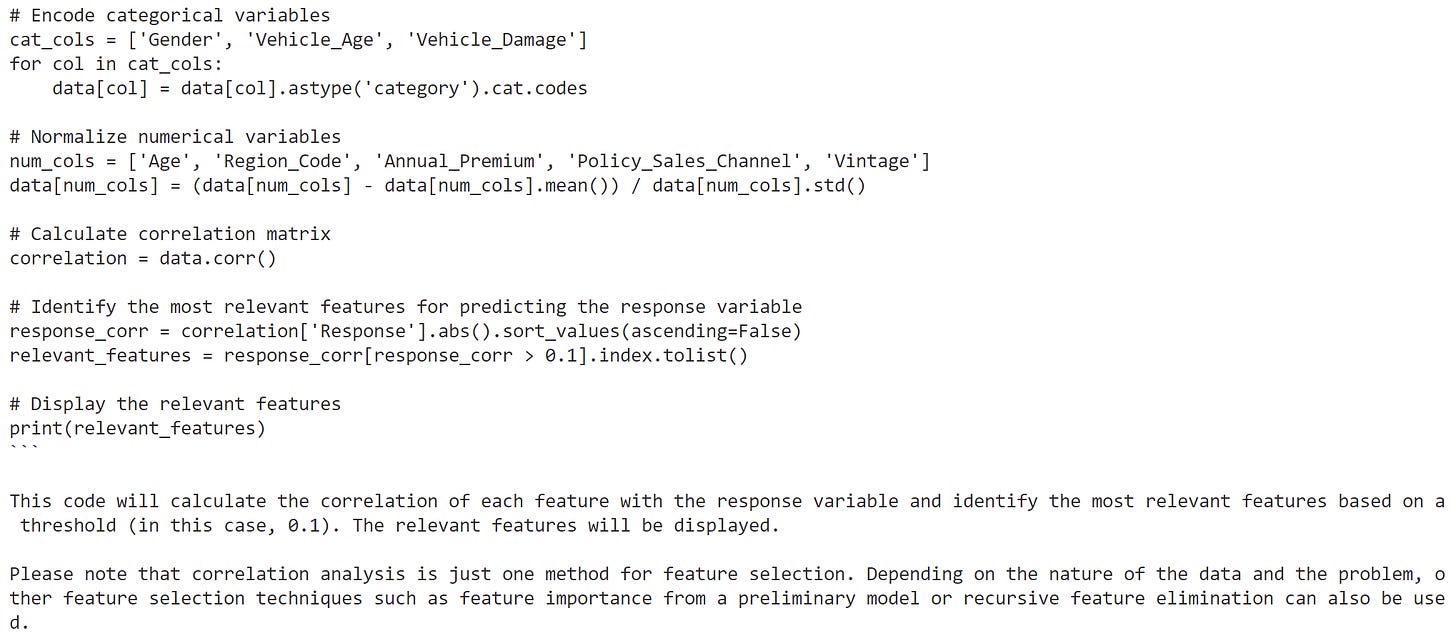

Here are a few snapshots from the resulting conversation log.

Planner plan

The Planner provides a plan for the whole project.

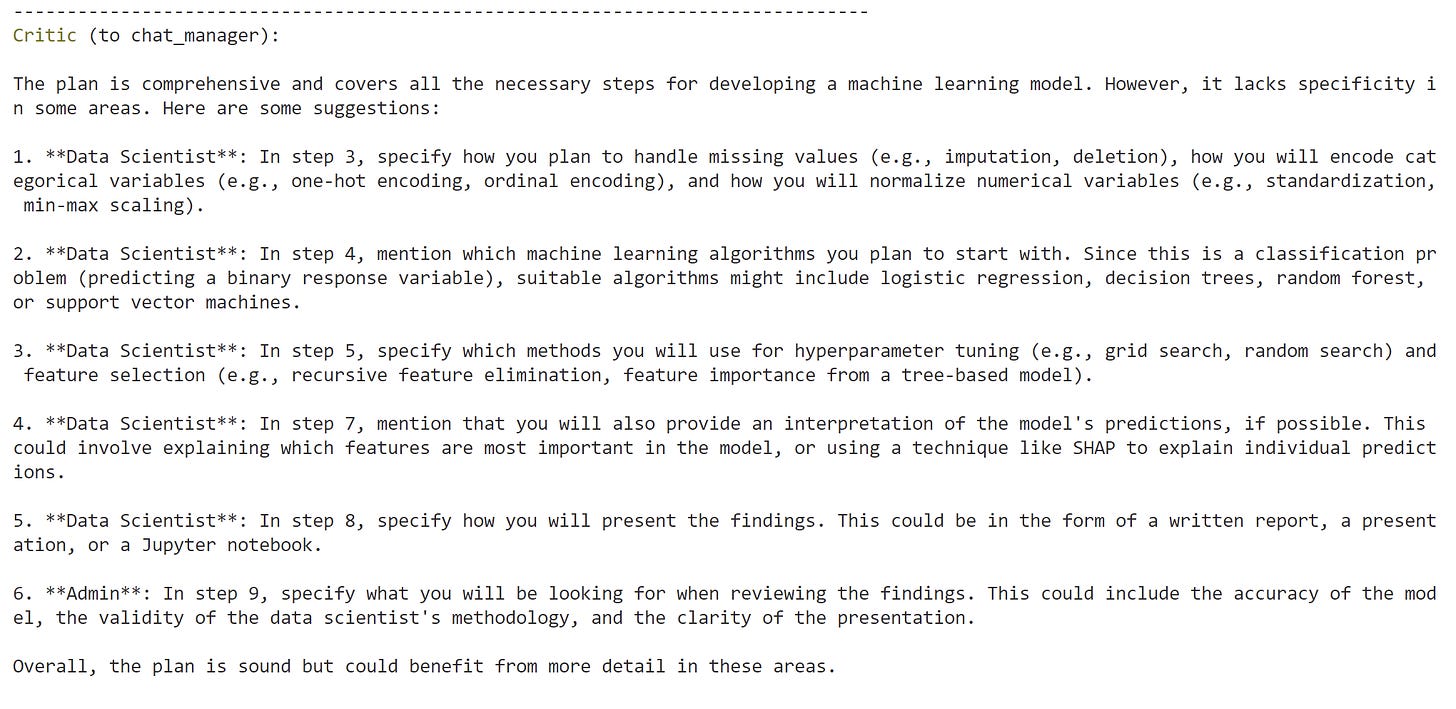

Critic’s feedback on the plan

The Critic provides feedback on the given plan, with particular emphasis on specifying each step more precisely.

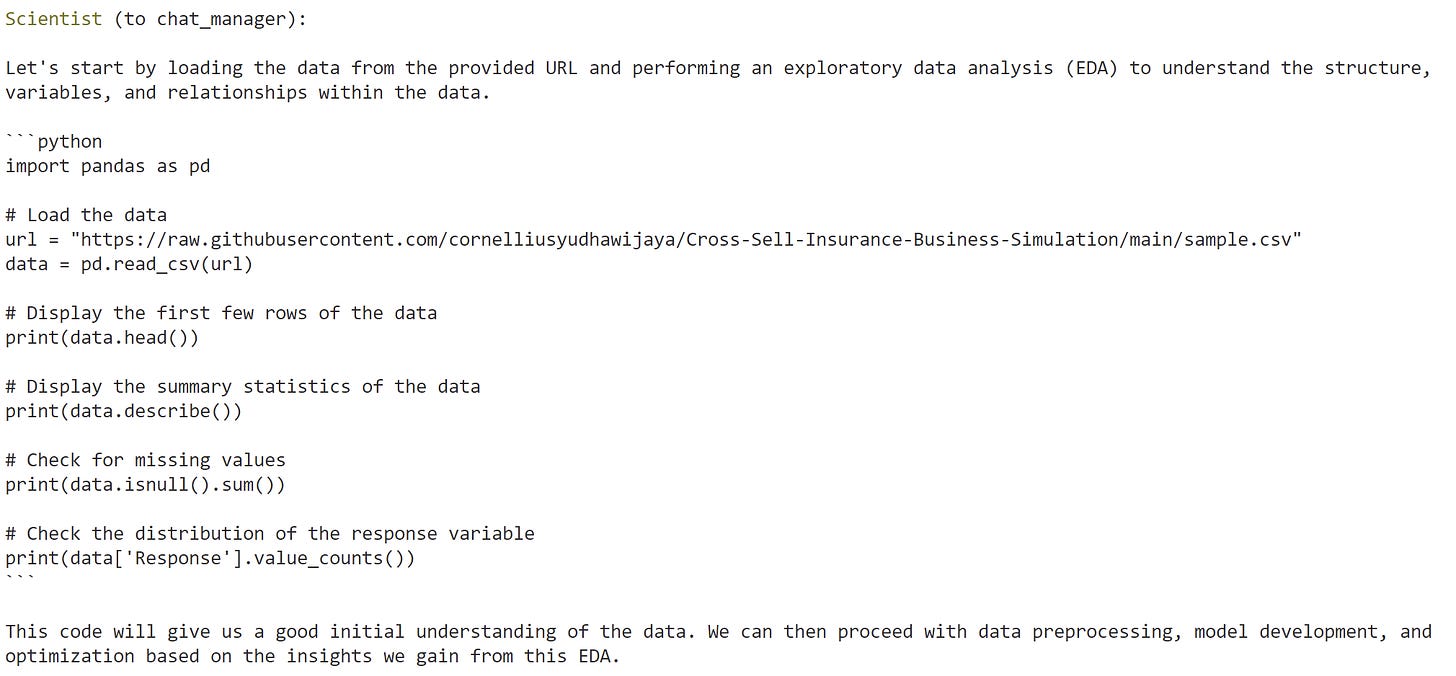

Data Scientist provides exploratory code.

The data scientist writes the code that the Executor then executes.

Data Scientist provides data pipeline code.

Data Scientist provides code for modeling.

Data Scientist provides code for feature selection.

The logs above are examples of the results from the conversation between the agents.

The result seems fine, but I noticed some problems with the AutoGen agents’ conversations:

- The agents do not know when to stop when the task is complex.
- Sometimes the agents simply repeat each other, and the conversation doesn't improve anything.
- The cost can climb, especially if the conversation keeps repeating. I think I spent close to $20 on the experiments.
- You only get the result once the task is finished.

For the problems above, I suggest two solutions:

- We, as the human input, intervene with direct commands when needed, or
- We specify the expected result as precisely as possible, with a clear goal.
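The repetition problem can also be caught programmatically, which makes it easier to decide when to step in. Here is a minimal sketch of such a check using the standard library. The is_repeating helper, its window size, and its similarity threshold are all hypothetical choices of mine and not part of AutoGen. How to wire it into a chat is left open.

```python
from difflib import SequenceMatcher


def is_repeating(messages: list, window: int = 4, threshold: float = 0.9) -> bool:
    """Return True when the latest message nearly duplicates a recent one.

    The latest message is compared against up to `window` messages before it.
    """
    if len(messages) < 2:
        return False
    latest = messages[-1]
    recent = messages[-(window + 1):-1]
    return any(
        SequenceMatcher(None, latest, earlier).ratio() >= threshold
        for earlier in recent
    )


history = [
    "Plan: load the data, clean it, then train a model.",
    "The plan looks fine, please proceed.",
    "Plan: load the data, clean it, then train a model.",
]
print(is_repeating(history))      # True
print(is_repeating(history[:2]))  # False
```

A check like this could trigger a human intervention or end the run early instead of letting the agents use up the remaining rounds.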

Overall, I see the potential of agents for data scientist work. There is still a lot that the developers can improve in the agent framework, and we, the end users, can also customize the agents for our requirements.

There are still many things I want to experiment with, including:

- Adding agents for data engineers or machine learning engineers to see how they interact,
- Customizing the agents with specific class functions,
- Providing better prompting for the data scientist's work.

That’s all for my experiment. I hope you can get inspiration from it.

Thank you, everyone, for subscribing to my newsletter. If you have something you want me to write or discuss, please comment or directly message me through my social media!