Simple RAG Implementation With Contextual Semantic Search

NBD Lite #45 - Enhancing AI Outputs with Semantic Understanding

Hi everyone! Cornellius here, back with another Lite series. This time, we’ll explore advanced techniques and production methods for Retrieval-Augmented Generation (RAG), tools that should help with your own use cases. This will be a long series, so stay tuned!

All the code used in this series will be available in the RAG-To-Know repository! Don’t forget to star it and share it with your network.

Retrieval-augmented generation (RAG) is a framework that combines the strengths of retrieval-based systems and generative models.

Many people already know that a Large Language Model (LLM) can answer our questions or perform text-based tasks without a problem. However, its answers are sometimes irrelevant or inaccurate. When the model confidently produces incorrect or fabricated information, we call it hallucination.

By incorporating contextual semantic search into the retrieval process, RAG improves the model's ability to generate relevant outputs grounded in real-world knowledge.

This article provides a step-by-step guide to implementing a simple RAG system using contextual semantic search.

By the end, you'll have a functional RAG implementation that uses embedding similarity for retrieval and an LLM for generation.

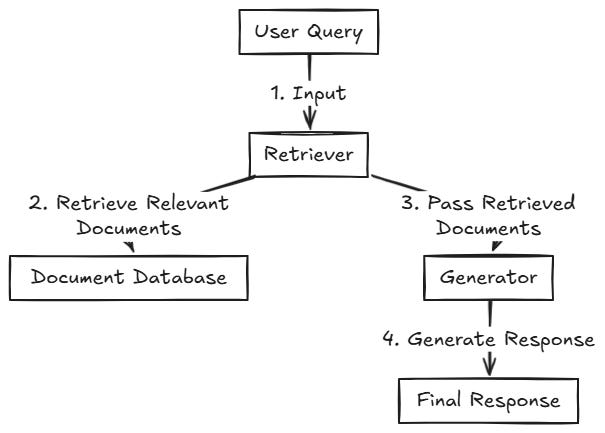

So, let’s get into it! For reference, the diagram below shows what we will build.

Introduction to RAG and Semantic Search

If you have never heard of RAG before, it is an advanced NLP framework that combines the capabilities of information retrieval systems with LLMs.

RAG addresses the static nature of LLMs, whose knowledge is fixed at training time, by adding a retrieval mechanism that accesses external data sources. This provides the generative model with up-to-date, relevant information so it can produce more accurate outputs.

The RAG framework operates through a two-step process:

1. Retrieval
2. Generation

In general, the retrieval component searches the documents in a database and identifies the information most relevant to the user's query. The generation component then uses this retrieved information to construct an informative response.
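The two steps can be expressed as a small skeleton in which the retriever and the generator are passed in as functions. This is a hypothetical plain-Python sketch: rag_pipeline and the toy stand-ins are illustrative names, not part of any library. The prompt format mirrors the one used later in this article, where the real retriever (ChromaDB) and generator (Gemini via LiteLLM) are plugged in.

```python
from typing import Callable, List


def rag_pipeline(
    query: str,
    retrieve: Callable[[str, int], List[str]],
    generate: Callable[[str], str],
    top_k: int = 3,
) -> str:
    """Two-step RAG: retrieve supporting passages, then generate an answer from them."""
    # Step 1 - Retrieval: fetch the top_k passages most relevant to the query.
    passages = retrieve(query, top_k)
    # Step 2 - Generation: give the passages to the generator as context.
    context = "\n".join(passages)
    prompt = f"Query: {query}\nContext: {context}\nAnswer:"
    return generate(prompt)


# Hypothetical stand-ins: a tiny passage list and a "generator" that just echoes its prompt.
knowledge = [
    "Auto insurance covers damage to your car.",
    "Life insurance pays a benefit when the insured person dies.",
]
answer = rag_pipeline(
    "What is car insurance?",
    retrieve=lambda q, k: knowledge[:k],  # placeholder: a real retriever ranks by relevance
    generate=lambda prompt: prompt,       # placeholder: a real system calls an LLM here
    top_k=1,
)
print(answer)
```

Keeping the two steps behind separate functions means you can swap the retrieval method (lexical, semantic, reranked) without touching the generation code, and vice versa.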

The basic diagram of RAG implementation is shown in the image below.

Now, the question is: how does the RAG system know which documents are most relevant? We can employ various techniques, but one of the most common is Contextual Semantic Search.

Contextual Semantic Search

Let’s discuss Contextual Semantic Search a bit.

I am sure everyone reading this article knows about search engines and uses them daily. When you type something into a search engine, it finds the websites that best match your query.

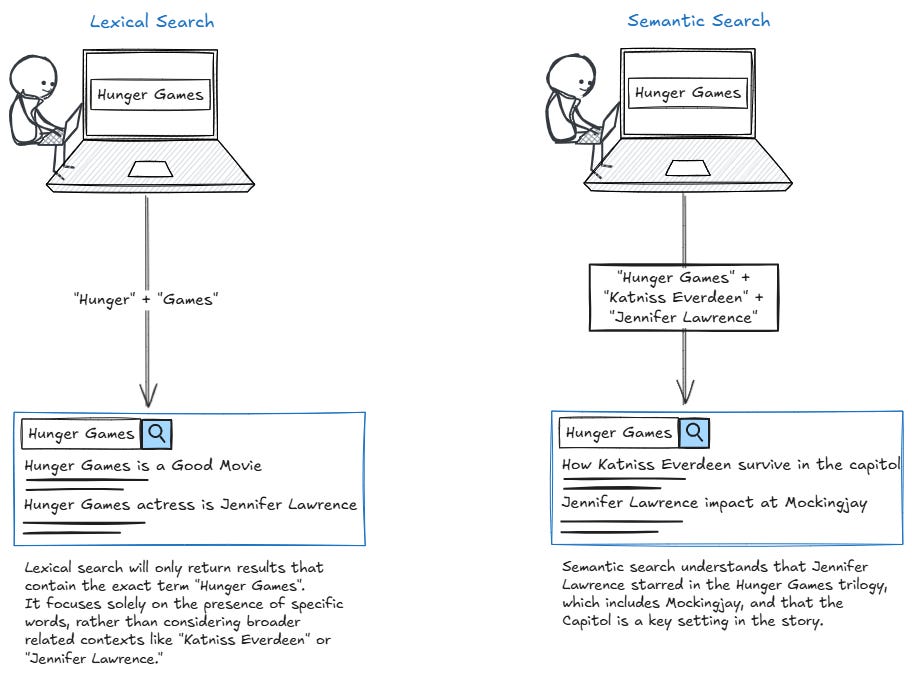

Traditionally, search engines use lexical search, which matches queries to documents based on exact or near-exact keyword matches. Because it only looks for the same words or phrases, lexical search does not understand the meaning of the query; it simply retrieves documents that contain the same terms as the input.
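To see this limitation concretely, here is a deliberately minimal lexical search in plain Python that scores documents by counting shared keywords. Production lexical engines use weighting schemes such as BM25, but they share the same blind spot. The function names and sample documents are invented for illustration.

```python
import re


def tokenize(text: str) -> set:
    """Lowercase the text and split it into a set of word tokens."""
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def lexical_search(query: str, documents: list, top_k: int = 3) -> list:
    """Rank documents by how many query terms they contain (exact keyword overlap)."""
    query_terms = tokenize(query)
    scored = []
    for doc in documents:
        overlap = len(query_terms & tokenize(doc))
        if overlap > 0:  # documents sharing no terms are never returned
            scored.append((overlap, doc))
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


documents = [
    "Automobile policies pay for collision damage and theft.",
    "Homeowners insurance protects your house and belongings.",
    "A car wash removes dirt from the vehicle.",
]
print(lexical_search("car coverage", documents))
# → ['A car wash removes dirt from the vehicle.']
```

The query about car coverage matches only the irrelevant car-wash sentence and misses the automobile policy entirely, because "car" and "automobile" are different keywords even though they mean nearly the same thing.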

Semantic search goes beyond keywords: it focuses on understanding the query's meaning, the relationships between terms, and the context in order to retrieve more relevant results. The image below compares the two approaches.

Contextual semantic search treats words and phrases not as isolated terms but as carrying meaning within a specific context. Lexical search cannot capture this context, whereas semantic search can use the surrounding information to resolve ambiguity (for example, whether "bank" refers to a riverbank or a financial institution).

How does semantic search understand context? Through representations in a vector space: words and phrases are transformed into numerical vectors (embeddings) that capture their semantic meaning, which allows texts to be compared by similarity.

The high-level representation of semantic search can be seen in the image below.

At the core of semantic search are embeddings: vector representations of words or phrases generated by embedding models such as BERT, GPT, and many others.

For example, the text "Where are you going?" could be represented in vector space as a list of 768 numbers ([0.14, 0.59, ..., 0.73]). The exact length depends on the model; the all-MiniLM-L6-v2 model used later in this article produces 384 numbers. Once all the documents and queries are represented this way, we can perform retrieval with a similarity scoring technique.

The most common similarity scoring technique is cosine similarity, which measures the cosine of the angle between the query embedding and each document embedding. We then rank the documents by this score and retrieve the top-k highest-scoring results as the most relevant.
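Here is cosine similarity and top-k ranking as a minimal plain-Python sketch. Cosine similarity is cos(a, b) = (a · b) / (||a|| ||b||): 1 for vectors pointing the same way, 0 for orthogonal ones. The vectors are hand-made three-dimensional stand-ins for real embeddings (invented for illustration), and the function names are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between vectors a and b (assumes neither is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))     # a · b
    norm_a = math.sqrt(sum(x * x for x in a))  # ||a||
    norm_b = math.sqrt(sum(y * y for y in b))  # ||b||
    return dot / (norm_a * norm_b)             # value in [-1, 1]


def top_k_by_cosine(query_vec, doc_vecs, k=2):
    """Score every document against the query and keep the k highest scores."""
    scored = [(doc_id, cosine_similarity(query_vec, vec)) for doc_id, vec in doc_vecs.items()]
    scored.sort(key=lambda pair: pair[1], reverse=True)  # highest similarity first
    return scored[:k]


# Hand-made 3-dimensional vectors standing in for real embeddings (invented for illustration).
doc_vecs = {
    "automobile_policy": [0.9, 0.1, 0.0],
    "home_policy": [0.2, 0.9, 0.1],
    "cooking_recipe": [0.0, 0.1, 0.95],
}
query_vec = [0.8, 0.2, 0.0]  # pretend embedding of "What is the insurance for car?"

for doc_id, score in top_k_by_cosine(query_vec, doc_vecs, k=2):
    print(f"{doc_id}: {score:.3f}")
```

The automobile vector scores about 0.991 and ranks first, the home-insurance vector follows at about 0.445, and the recipe is cut off by k=2. In practice, a vector database does this ranking for you, typically with an approximate nearest-neighbor index instead of scoring every document one by one.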

That’s the basic explanation; we can discuss the technical theory in another article. Let’s jump into the code implementation.

Preparation

In this article, we will build a simple RAG system with contextual semantic search.

To build a Contextual Semantic Search RAG system, we will use several tools, including:

- Chroma Vector Database for storing and querying embeddings.
- Sentence Transformers for generating embeddings (vector representations) of text.
- LangChain for working with text data, including text splitting and chunking.
- LiteLLM library for interacting with LLMs.
- PyPDF2 for extracting text from PDF files.

Let’s start by installing the required libraries.

```bash
pip install -q chromadb pypdf2 sentence-transformers litellm langchain
```

Next, we import the libraries and set up the environment variables. This tutorial uses a Hugging Face token and a Gemini API key, so please obtain both.

```python
import os

# Enable LiteLLM debug logging; set it before importing litellm to be safe.
os.environ["LITELLM_LOG"] = "DEBUG"

import PyPDF2
from sentence_transformers import SentenceTransformer
import chromadb
from litellm import completion
# Newer LangChain releases also ship this class in the langchain_text_splitters package.
from langchain.text_splitter import RecursiveCharacterTextSplitter

# Set environment variables. Uncomment these lines if you want to set them directly.
# os.environ["HUGGINGFACE_TOKEN"] = "your_huggingface_token_here"
# os.environ["GEMINI_API_KEY"] = "your_gemini_api_key_here"

# Retrieve environment variables (GEMINI_API_KEY is used later in generate_response)
HUGGINGFACE_TOKEN = os.getenv("HUGGINGFACE_TOKEN")
GEMINI_API_KEY = os.getenv("GEMINI_API_KEY")
```

Next, we extract all the text from the PDF files in the dataset folder. For my example, I am using the Insurance Handbook, which you can find in the dataset folder, but you can always swap in other files.

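```python
def extract_text_from_pdfs(folder_path):
    """Concatenate the text of every PDF file in folder_path."""
    all_text = ""
    for filename in sorted(os.listdir(folder_path)):
        if filename.lower().endswith(".pdf"):
            file_path = os.path.join(folder_path, filename)
            with open(file_path, "rb") as file:
                reader = PyPDF2.PdfReader(file)
                for page in reader.pages:
                    # extract_text() may return little or nothing for image-only pages;
                    # the newline keeps words from fusing across page boundaries
                    all_text += (page.extract_text() or "") + "\n"
    return all_text


pdf_folder = "dataset"
all_text = extract_text_from_pdfs(pdf_folder)
```

The essence of the RAG system is to retrieve the documents most relevant to a given query. Passing all the raw text from a PDF to the LLM at once would undermine this principle: it can overwhelm the model (or exceed its context window) and bury the relevant passages among irrelevant ones.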

Instead, we split the raw text into smaller, meaningful chunks (documents) to keep the system efficient and accurate. These chunks allow the retriever to identify and pass only the most relevant information to the generative model for further processing.
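The simplest way to chunk text is a fixed-size sliding window. The sketch below is plain Python rather than LangChain, and chunk_text is a hypothetical helper. It shows how a chunk size and an overlap interact: each window starts chunk_size - chunk_overlap characters after the previous one, so neighboring chunks share their boundary text.

```python
def chunk_text(text: str, chunk_size: int = 500, chunk_overlap: int = 50) -> list:
    """Split text into fixed-size character windows; consecutive windows share chunk_overlap chars."""
    if chunk_overlap >= chunk_size:
        raise ValueError("chunk_overlap must be smaller than chunk_size")
    step = chunk_size - chunk_overlap  # how far the window moves each time
    chunks = []
    for start in range(0, len(text), step):
        chunks.append(text[start:start + chunk_size])
        if start + chunk_size >= len(text):  # this window already reaches the end
            break
    return chunks


sample = "abcdefghijklmnopqrstuvwxyz"  # 26 characters
print(chunk_text(sample, chunk_size=10, chunk_overlap=3))
# → ['abcdefghij', 'hijklmnopq', 'opqrstuvwx', 'vwxyz']
```

Each chunk repeats the last three characters of the previous one. A fixed window like this can cut words and sentences in half, which is the problem the separator hierarchy in the LangChain splitter below is meant to reduce.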

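```python
text_splitter = RecursiveCharacterTextSplitter(
    chunk_size=500,                      # Maximum size of each chunk (in characters)
    chunk_overlap=50,                    # Overlap between consecutive chunks to maintain context
    separators=["\n\n", "\n", " ", ""],  # Splitting hierarchy, from coarsest to finest
)

chunks = text_splitter.split_text(all_text)
```

In the code above, we split the text into chunks of at most 500 characters, with a 50-character overlap between consecutive chunks. The separators define the splitting hierarchy: the splitter first tries to split on paragraph breaks ("\n\n"), then on line breaks ("\n"), then on spaces, and only as a last resort between individual characters, so chunks tend to end at natural boundaries.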
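To make the splitting hierarchy concrete, here is a simplified recursive splitter in plain Python. It follows the same idea as LangChain's RecursiveCharacterTextSplitter (try the coarsest separator first and fall back to finer ones only for pieces that are still too long), but it is a hypothetical sketch, not LangChain's code: it ignores chunk_overlap and does not strip whitespace.

```python
def recursive_split(text, chunk_size, separators=("\n\n", "\n", " ", "")):
    """Simplified recursive splitter: coarse separators first, finer ones only when needed.

    Unlike LangChain's RecursiveCharacterTextSplitter, this sketch has no chunk overlap
    and does not strip whitespace. chunk_size is assumed to be a positive integer.
    """
    if len(text) <= chunk_size:
        return [text] if text else []

    # Choose the first separator present in the text; "" means "split between characters".
    sep = next((s for s in separators if s == "" or s in text), "")
    finer = separators[separators.index(sep) + 1:] if sep in separators else ()
    pieces = list(text) if sep == "" else text.split(sep)

    chunks, current = [], ""
    for piece in pieces:
        if len(piece) > chunk_size:
            # This piece alone is too long: flush the buffer, then split it with finer separators.
            if current:
                chunks.append(current)
                current = ""
            chunks.extend(recursive_split(piece, chunk_size, finer))
            continue
        candidate = current + sep + piece if current else piece
        if len(candidate) <= chunk_size:
            current = candidate  # still fits: keep merging neighboring pieces
        else:
            chunks.append(current)  # buffer is full: emit it and start a new one
            current = piece
    if current:
        chunks.append(current)
    return chunks


doc = "RAG has two steps.\n\nFirst, retrieve relevant chunks.\nThen, generate an answer."
print(recursive_split(doc, chunk_size=40))
# → ['RAG has two steps.', 'First, retrieve relevant chunks.', 'Then, generate an answer.']
```

The paragraph break is tried first. The second paragraph is still longer than 40 characters, so only that piece falls back to line breaks. With chunk_size=60, the second paragraph fits and stays intact as a single chunk.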

Next, we set up the vector database and generate embeddings for all the chunks. With PersistentClient, ChromaDB stores the database in a folder on your local machine, which is good for testing, but we will need to adjust the setup to take it into production.

```python
# Initialize a persistent ChromaDB client (data is stored in the local "chroma_db" folder)
client = chromadb.PersistentClient(path="chroma_db")

# Load the SentenceTransformer model for text embeddings
text_embedding_model = SentenceTransformer("all-MiniLM-L6-v2")

# Delete the existing collection (if any) so reruns start from a clean state
try:
    client.delete_collection(name="knowledge_base")
    print("Deleted existing collection: knowledge_base")
except Exception as e:
    print(f"Collection does not exist or could not be deleted: {e}")

# Create a new collection for text embeddings
collection = client.create_collection(name="knowledge_base")

# Add text chunks to the collection
for i, chunk in enumerate(chunks):
    # Generate the embedding for the chunk
    embedding = text_embedding_model.encode(chunk)

    # Add to the collection with metadata
    collection.add(
        ids=[f"chunk_{i}"],                            # Unique ID for each chunk
        embeddings=[embedding.tolist()],               # Embedding vector
        metadatas=[{"source": "pdf", "chunk_id": i}],  # Metadata
        documents=[chunk],                             # Original text
    )
```

In the code above, we create a collection named "knowledge_base" and store every chunk in the vector database together with its embedding and metadata. This information is crucial, as it can be used later to enhance retrieval.

You are now ready to perform Contextual Semantic Search. ChromaDB makes the search easy: we only need to embed the query with the same model used for the chunks and pass the query embedding to the collection.

For example, here is how we retrieve the top five semantic search results.

```python
def semantic_search(query, top_k=5):
    # Generate the embedding for the query with the same model used for the chunks
    query_embedding = text_embedding_model.encode(query)

    # Query the collection for the top_k nearest chunks
    results = collection.query(
        query_embeddings=[query_embedding.tolist()],
        n_results=top_k,
    )
    return results


# Example query
query = "What is the insurance for car?"
results = semantic_search(query)

# Display results
for i, result in enumerate(results["documents"][0]):
    print(f"Result {i+1}: {result}\n")
```

You can see that each result is related to car insurance in some way, even when it does not contain the word “car”. For example, the first result was about automobiles.
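Two details of this query call are easy to miss. First, collection.query takes a list of query embeddings, so every field of the result is a list containing one inner list per query; that is why the code reads results["documents"][0]. Second, Chroma's default distance metric is squared L2 rather than cosine similarity. For unit-length vectors, the two produce the same ranking, because ||a - b||^2 = 2 - 2 cos(a, b); SentenceTransformer.encode accepts normalize_embeddings=True if you want to be sure your vectors have unit length. The sketch below is a hypothetical in-memory stand-in in plain Python (ToyCollection, normalize, and the three-dimensional vectors are invented for illustration). It mimics the nested result layout and uses squared L2 distance, but it is not how Chroma works internally, and it uses new variable names so it does not overwrite collection or results if you run it in the same notebook.

```python
import math


class ToyCollection:
    """Hypothetical in-memory stand-in for a vector collection (not Chroma's implementation)."""

    def __init__(self):
        self.ids, self.embeddings, self.metadatas, self.documents = [], [], [], []

    def add(self, ids, embeddings, metadatas, documents):
        # Keep parallel lists: position i holds the id, vector, metadata, and text of one chunk.
        self.ids.extend(ids)
        self.embeddings.extend(embeddings)
        self.metadatas.extend(metadatas)
        self.documents.extend(documents)

    def query(self, query_embeddings, n_results=5):
        # One inner list per query embedding, so a single query's hits live at index [0].
        out = {"ids": [], "documents": [], "metadatas": [], "distances": []}
        for q in query_embeddings:
            # Squared L2 distance to every stored vector (smaller = more similar).
            dists = [sum((a - b) ** 2 for a, b in zip(q, e)) for e in self.embeddings]
            order = sorted(range(len(dists)), key=lambda i: dists[i])[:n_results]
            out["ids"].append([self.ids[i] for i in order])
            out["documents"].append([self.documents[i] for i in order])
            out["metadatas"].append([self.metadatas[i] for i in order])
            out["distances"].append([dists[i] for i in order])
        return out


def normalize(v):
    """Scale v to unit length so squared L2 distance equals 2 - 2 * cosine similarity."""
    n = math.sqrt(sum(x * x for x in v))
    return [x / n for x in v]


toy_vectors = [normalize(v) for v in ([0.9, 0.1, 0.0], [0.2, 0.9, 0.1], [0.0, 0.1, 0.95])]
toy_collection = ToyCollection()
toy_collection.add(
    ids=["chunk_0", "chunk_1", "chunk_2"],
    embeddings=toy_vectors,
    metadatas=[{"source": "pdf", "chunk_id": i} for i in range(3)],
    documents=["Automobile policy text", "Homeowners policy text", "Cooking recipe text"],
)
toy_results = toy_collection.query(query_embeddings=[normalize([0.8, 0.2, 0.0])], n_results=2)
print(toy_results["documents"][0])  # → ['Automobile policy text', 'Homeowners policy text']
```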

Lastly, we pass the top results as context to the Gemini model to answer our query.

```python
# Set up LiteLLM with Gemini
def generate_response(query, context):
    # Combine the query and context into a single prompt
    prompt = f"Query: {query}\nContext: {context}\nAnswer:"

    # Call the Gemini model via LiteLLM
    response = completion(
        model="gemini/gemini-1.5-flash",  # Gemini model name; swap in a currently available one if needed
        messages=[{"content": prompt, "role": "user"}],
        api_key=GEMINI_API_KEY,
    )

    # Extract and return the generated text
    return response["choices"][0]["message"]["content"]


# Retrieve the top results from semantic search
search_results = semantic_search(query)
context = "\n".join(search_results["documents"][0])

# Generate a response using the retrieved context
response = generate_response(query, context)
print("Generated Response:\n", response)
```

Output:

```text
Generated Response:
Based on the provided text, car insurance, or auto insurance, protects against financial loss from an accident. It's a contract where the policyholder pays a premium, and the insurance company pays for losses as defined in the policy. The insurance provides property, liability, and medical coverage. Property coverage pays for damage to or theft of the car. Liability insurance pays the other driver's costs if the policyholder is at fault. The text also mentions comprehensive coverage (damage not involving a collision) and uninsured motorists coverage.
```

You just learned how to set up a RAG system with Contextual Semantic Search!
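The prompt in generate_response simply places the query and the retrieved chunks side by side. If you want to experiment with it, it helps to move prompt assembly into a pure function that you can print and test without calling the API. The version below is a hypothetical sketch, not the code that produced the response above: it numbers each retrieved chunk and asks the model to answer only from the context, a common prompting pattern for keeping answers grounded in the retrieved text. The sample chunks are invented.

```python
def build_prompt(question, passages):
    """Hypothetical prompt builder: grounding instruction, numbered context passages, then the question."""
    numbered = "\n".join(f"[{i}] {p}" for i, p in enumerate(passages, start=1))
    return (
        "Answer the question using only the context below. "
        "If the context does not contain the answer, say that you don't know.\n\n"
        f"Context:\n{numbered}\n\n"
        f"Question: {question}\nAnswer:"
    )


example_prompt = build_prompt(
    "What is the insurance for car?",
    [
        "Auto insurance protects against financial loss from an accident.",       # invented sample chunk
        "Liability coverage pays the other driver's costs if you are at fault.",  # invented sample chunk
    ],
)
print(example_prompt)
```

To try it, you could pass build_prompt(query, search_results["documents"][0]) as the message content in the completion(...) call instead of the original prompt string.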

We can further improve the results, as the output above might not fully satisfy the user’s query, or the retrieval process may miss the most relevant documents.

In the RAG-To-Know series, we’ll continue exploring more techniques. In the next installment, we’ll dive into Reranking, so stay tuned!

Is there anything else you’d like to discuss? Let’s dive into it together!
