Standardization vs Normalization: The Feature Scaler Role - NBD Lite #15

Feature transformation role in the machine learning world

There are many Machine Learning algorithms out there, and each one has its own data requirements.

One way to meet these requirements is to scale the features, especially if our algorithm is sensitive to the scale of the data.

As feature scaling can become an important preprocessing step, we need to understand when to use it.

The common methods for feature scaling are standardization and normalization.

But how do they work, and when should we use each one? That's what we will explore today! Let’s get into it.

In summary, here are the differences between standardization and normalization.

Standardization Vs. Normalization

Standardization

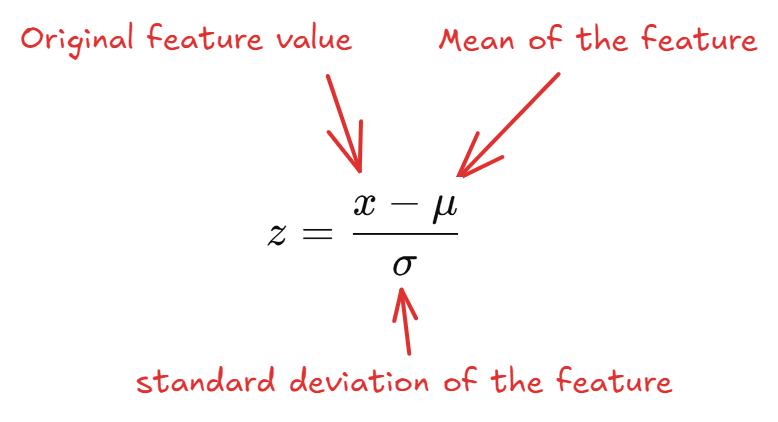

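Standardization is a feature scaling technique that rescales each feature to have a mean of 0 and a standard deviation of 1. It shifts and rescales the values but does not change the shape of the distribution, so a skewed feature stays skewed after standardization.

The formula is as follows:

z = (x - μ) / σ

where x is the original value, μ is the mean of the feature, and σ is its standard deviation.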

We can perform standardization with the following code.

from sklearn.preprocessing import StandardScaler

standard_scaler = StandardScaler()

# Learn the mean and standard deviation from X_train, then scale it
X_standardized = standard_scaler.fit_transform(X_train)

So, when should we use standardization?

Data follows a Normal distribution.

Working with ML models that assume data is normally distributed.

Working with algorithms that rely on distance metrics, such as K-Means Clustering, which work better with data centered around 0.
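To make the standardization formula concrete, here is a minimal sketch that applies it by hand with NumPy and compares the result with `StandardScaler`. The array `X_toy` is made-up data used only for illustration.

```python
import numpy as np
from sklearn.preprocessing import StandardScaler

# Hypothetical toy data: 4 samples, 2 features on very different scales
X_toy = np.array([
    [1.0, 100.0],
    [2.0, 200.0],
    [3.0, 300.0],
    [4.0, 400.0],
])

# Manual standardization: z = (x - mean) / std, computed per column
mean = X_toy.mean(axis=0)
std = X_toy.std(axis=0)  # population std (ddof=0), the same convention StandardScaler uses
X_manual = (X_toy - mean) / std

# The same transformation with scikit-learn
X_sklearn = StandardScaler().fit_transform(X_toy)

print(np.allclose(X_manual, X_sklearn))  # both approaches should agree
print(X_sklearn.mean(axis=0))            # close to 0 for each feature
print(X_sklearn.std(axis=0))             # close to 1 for each feature
```

After scaling, both features live on the same scale even though the second one was originally 100 times larger.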

Normalization

In comparison with Standardization, Normalization is a feature scaling method that rescales the values of features to an expected fixed range, e.g., [0, 1] or [-1, 1].

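The formula is as follows:

x' = (x - min) / (max - min)

where min and max are the minimum and maximum values of the feature. This gives values in [0, 1]. For a general range [a, b], we rescale it as x'' = a + x' * (b - a).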

We can perform Normalization with the following code:

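from sklearn.preprocessing import MinMaxScaler

# Rescale every feature to the range [-1, 1]
min_max_scaler = MinMaxScaler(feature_range=(-1, 1))

# Learn the minimum and maximum from X_train, then scale it
X_train_normalized = min_max_scaler.fit_transform(X_train)

So, when should we use normalization?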

Data doesn’t follow a normal distribution.

Working with models that rely on a predefined range, such as neural networks.

The features have different units, and we want to prevent features with larger ranges from dominating the model.

We need the data to follow a fixed range.
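As a rough illustration of min-max normalization, the sketch below rescales a made-up array to [-1, 1] by hand and checks it against `MinMaxScaler`. The names `X_toy`, `a`, and `b` are assumptions for this example only.

```python
import numpy as np
from sklearn.preprocessing import MinMaxScaler

# Hypothetical toy data: 4 samples, 2 features with different ranges
X_toy = np.array([
    [1.0, 100.0],
    [2.0, 200.0],
    [3.0, 300.0],
    [5.0, 500.0],
])

a, b = -1.0, 1.0  # target range [a, b]

# Manual min-max scaling, computed per column
x_min = X_toy.min(axis=0)
x_max = X_toy.max(axis=0)
X_manual = a + (X_toy - x_min) * (b - a) / (x_max - x_min)

# The same transformation with scikit-learn
X_sklearn = MinMaxScaler(feature_range=(a, b)).fit_transform(X_toy)

print(np.allclose(X_manual, X_sklearn))  # both approaches should agree
print(X_sklearn.min(axis=0))             # lower bound of the range per feature
print(X_sklearn.max(axis=0))             # upper bound of the range per feature
```

Each feature's smallest value maps to the lower bound and its largest value maps to the upper bound, regardless of the original units.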

Potential Pitfalls

There are several pitfalls and considerations to keep in mind before we perform feature scaling:

Feature scaling changes the original data into another form, which can lead to a loss of information, such as the original units and magnitudes of the features.

Data leakage can happen if we apply the scaler before we split the dataset.

Outliers affect the feature scaling process. Normalization is significantly affected by outliers because it depends on the minimum and maximum values, so standardization might be more appropriate. However, extreme outliers can also affect standardization, as it is based on the mean and standard deviation.
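The leakage and outlier points above can be sketched as follows. This is only an illustration on randomly generated data, and the variable names are assumptions rather than part of any real project: the scaler is fitted on the training split only, and a single extreme value is used to show how each scaler reacts.

```python
import numpy as np
from sklearn.model_selection import train_test_split
from sklearn.preprocessing import MinMaxScaler, StandardScaler

# Hypothetical feature matrix: 200 samples, 3 features
rng = np.random.default_rng(0)
X = rng.normal(loc=50.0, scale=10.0, size=(200, 3))

# 1) Avoid data leakage: split first, then fit the scaler on the training data only
X_train, X_test = train_test_split(X, test_size=0.25, random_state=0)

scaler = StandardScaler()
X_train_scaled = scaler.fit_transform(X_train)  # learns mean/std from the training split
X_test_scaled = scaler.transform(X_test)        # reuses the training statistics, no refit

# 2) Outlier effect: one extreme value in an otherwise small-valued feature
x = np.array([[1.0], [2.0], [3.0], [4.0], [1000.0]])

x_minmax = MinMaxScaler().fit_transform(x)
x_std = StandardScaler().fit_transform(x)

print(x_minmax.ravel())  # the four ordinary values are squeezed close to 0
print(x_std.ravel())     # the mean and std are also pulled by the outlier
```

Calling `fit_transform` on the full dataset before splitting would let the test data influence the learned statistics, which is the leakage described above. The second part shows that neither scaler is immune to an extreme outlier.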

That’s all for today! I hope this helps you understand how to use Normalization and Standardization.

Are there any more things you would love to discuss? Let’s talk about it together!
