What I Would Do to Learn Large Language Models (LLMs) from the Beginning

Reflecting on my experience with learning new technology

🔥Reading Time: 3 Minutes🔥

🔥Benefit Time: A lot of Time🔥

It’s been over a year since Generative AI tools such as ChatGPT, Midjourney, and Runway took over the world. I can’t even remember how I worked without the assistance of an LLM. I am sure you also feel the benefit one way or another.

With LLMs becoming such a big part of our world, it’s beneficial to understand them. I must admit that I am a late bloomer with LLMs: I have only come to know them in depth in recent years, and I got there by learning on my own.

However, sometimes I think, “How could I learn better if I started over from the beginning?”

From that question, I want to write about my recommendations and action plan for learning LLMs all over again.

With that in mind, let’s get into it.

LLM Learning Recommendation

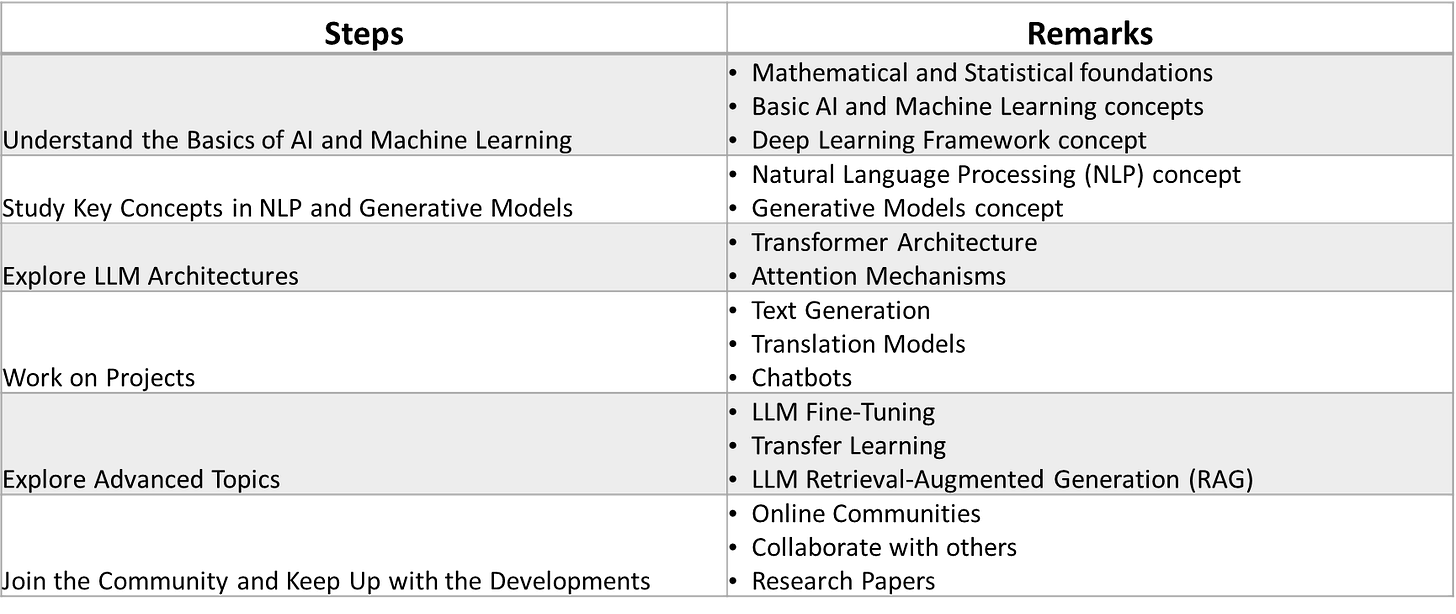

In this article, I will explore the steps and recommendations I would follow if I were to start over learning about LLMs. For those who want the short version, the table above is the step-by-step summary of what I would do.

If you want me to elaborate briefly, let’s walk through each step in the table.

Read until the end to see the FREE resources you can use to learn LLMs.

👇👇👇

Understand the Basics of AI and Machine Learning

Before learning about LLMs, I worked as a data scientist, so I already understood AI and Machine Learning well.

However, I realize I would have been lost if I had jumped directly into LLMs without that background. For that reason, I think there are a few basics I would learn first. If I group them, they would be:

Mathematical and Statistical Foundations: Subjects such as linear algebra, calculus, probability, descriptive statistics, and inferential statistics.

Machine Learning Basics: Try to understand supervised and unsupervised learning, neural networks, and deep learning. You also need to know how to perform model training and evaluation.

Deep Learning Framework Concepts: Try out a few deep learning framework libraries such as PyTorch or TensorFlow.
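To make "model training and evaluation" concrete, here is a minimal sketch in plain Python rather than PyTorch or TensorFlow. It fits a one-variable linear model with gradient descent and then measures the error on held-out data. The synthetic data, the function names, and the hyperparameters are all my own assumptions for illustration.

```python
import random

def train_linear(xs, ys, lr=0.05, epochs=1000):
    """Fit y = w*x + b by batch gradient descent on mean squared error."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # Gradients of the mean squared error with respect to w and b
        grad_w = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b

def mse(w, b, xs, ys):
    """Mean squared error of the model on a dataset."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)

# Synthetic data (an assumption for this sketch): y = 2x + 1 plus small noise
rng = random.Random(0)
xs = [rng.uniform(0, 5) for _ in range(100)]
ys = [2.0 * x + 1.0 + rng.gauss(0, 0.1) for x in xs]

# Hold out the last 20 points so evaluation uses data the model never saw
train_x, test_x = xs[:80], xs[80:]
train_y, test_y = ys[:80], ys[80:]

w, b = train_linear(train_x, train_y)
test_error = mse(w, b, test_x, test_y)
print(f"w={w:.2f}, b={b:.2f}, test MSE={test_error:.4f}")
```

A deep learning framework automates the gradient computation and scales this same train-then-evaluate loop to much larger models.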

Here are some FREE resources you can use to learn the above.

👉HarvardX: Data Science: Inference and Modeling

👉Mathematics for Machine Learning book

👉Zero to Mastery Learn PyTorch for Deep Learning

Study Key Concepts in NLP and Generative Models

After learning the basics, we want to focus on the two fields most specific to LLMs: NLP and Generative Models. These two concepts will become the foundation for understanding large language models (LLMs).

Natural Language Processing (NLP): Learn NLP concepts and techniques such as tokenization, embedding, sequence models, and attention mechanisms.

Generative Models: Learn about different generative models like GANs, VAEs, and autoregressive models.
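Tokenization and autoregressive generation can be sketched in a few lines of plain Python. This is a toy under clear assumptions: a whitespace tokenizer stands in for the subword tokenizers real LLMs use, and a bigram count table stands in for a neural network. The corpus and all function names are hypothetical.

```python
import random
from collections import defaultdict

def tokenize(text):
    """Whitespace tokenizer; real LLMs use subword tokenizers instead."""
    return text.lower().split()

def build_vocab(tokens):
    """Map each distinct token to an integer id, in order of first appearance."""
    vocab = {}
    for tok in tokens:
        vocab.setdefault(tok, len(vocab))
    return vocab

def train_bigram(ids):
    """Count how often each token id follows another."""
    counts = defaultdict(lambda: defaultdict(int))
    for prev, nxt in zip(ids, ids[1:]):
        counts[prev][nxt] += 1
    return counts

def generate(counts, start_id, length, rng):
    """Autoregressive sampling: each new token is drawn given the previous one."""
    out = [start_id]
    for _ in range(length):
        followers = counts.get(out[-1])
        if not followers:
            break  # the last token was never followed by anything in the corpus
        candidates, weights = zip(*followers.items())
        out.append(rng.choices(candidates, weights=weights)[0])
    return out

corpus = "the cat sat on the mat the dog sat on the rug"
tokens = tokenize(corpus)
vocab = build_vocab(tokens)
ids = [vocab[t] for t in tokens]
counts = train_bigram(ids)

id_to_token = {i: t for t, i in vocab.items()}
sample = generate(counts, vocab["the"], 6, random.Random(0))
print(" ".join(id_to_token[i] for i in sample))
```

An LLM follows the same loop of predicting the next token and appending it, but conditions on the whole preceding context instead of only the previous token.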

Here are some FREE resources you can use to learn the above.

👉CS224N: Natural Language Processing with Deep Learning

Explore LLM Architectures

We should start learning LLM architectures once we have the foundations in machine learning, NLP, and Generative AI.

Before we move on to the application, it’s essential to understand what makes LLMs unique, especially from theoretical and structural points of view.

Transformer Architecture: Learn the architecture behind models like GPT and BERT and their variants.

Attention Mechanisms: Understand the attention mechanism and related concepts such as self-attention, multi-head attention, and positional encoding.
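Self-attention is easier to follow with numbers in front of you. The sketch below implements single-head scaled dot-product attention, softmax(QKᵀ / √d_k)·V, in plain Python. The tiny input matrix and the identity projection weights are assumptions chosen only to keep the example readable; a real model learns these weights and uses a tensor library.

```python
import math

def softmax(row):
    """Numerically stable softmax over a list of scores."""
    m = max(row)
    exps = [math.exp(v - m) for v in row]
    total = sum(exps)
    return [e / total for e in exps]

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def transpose(m):
    return [list(col) for col in zip(*m)]

def self_attention(x, w_q, w_k, w_v):
    """Single-head scaled dot-product self-attention."""
    q, k, v = matmul(x, w_q), matmul(x, w_k), matmul(x, w_v)
    d_k = len(k[0])
    scores = matmul(q, transpose(k))  # similarity of every token to every token
    weights = [softmax([s / math.sqrt(d_k) for s in row]) for row in scores]
    return matmul(weights, v), weights  # each output is a weighted mix of values

# Three tokens with 2-dimensional embeddings (toy values)
x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
identity = [[1.0, 0.0], [0.0, 1.0]]  # stand-in for learned projections

output, weights = self_attention(x, identity, identity, identity)
for row in weights:
    print([round(w, 3) for w in row])
```

Each row of `weights` sums to 1 and shows how much one token attends to every token in the sequence. Multi-head attention runs several of these in parallel with different projections and concatenates the results.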

Here are some FREE resources you can use to learn the above.

👉LLM Courses by Maxime Labonne

👉From Transformer to LLM: Architecture, Training and Usage

Work on Projects

The best way to learn is to use everything we know in projects. By putting the concepts into practice, we understand them better. Here are some simple projects you can start doing right now.

Text Generation: Create a simple text generator using an LLM such as GPT-3 or Llama.

Translation Models: Build a translation model using existing datasets.

Chatbots: Develop a conversational agent using pre-trained models for specific tasks.

These are only example projects; of course, you can build something else that interests you.

Here are some FREE resources you can use to learn the above.

👉How to Build LLM Applications with LangChain Tutorial

👉Build Your Translator with LLMs & Hugging Face

👉Build an LLM RAG Chatbot With LangChain

Explore Advanced Topics

Learning LLMs doesn’t end there; we can explore many advanced topics to improve the results and diversify the use cases. Here are some advanced topics that we can explore:

Fine-Tuning: Learn how to fine-tune models for specific tasks and datasets.

Transfer Learning: Understand how to leverage pre-trained models for various NLP tasks.

LLM Retrieval-Augmented Generation (RAG): Study how retrieving relevant documents from an external knowledge source and adding them to the prompt helps ground the model’s output.
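The retrieval half of RAG can be sketched without any LLM at all: find the documents most similar to the question, then place them in the prompt. The version below is a minimal sketch that assumes bag-of-words cosine similarity in place of the learned embeddings and vector stores used in production. The documents, the prompt template, and the function names are hypothetical.

```python
import math
from collections import Counter

def bow(text):
    """Bag-of-words vector: token -> count."""
    return Counter(text.lower().split())

def cosine(a, b):
    """Cosine similarity between two bag-of-words vectors."""
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query, docs, k=1):
    """Return the k documents most similar to the query."""
    q = bow(query)
    return sorted(docs, key=lambda d: cosine(q, bow(d)), reverse=True)[:k]

def build_prompt(query, docs, k=1):
    """Augment the question with retrieved context before sending it to an LLM."""
    context = "\n".join(retrieve(query, docs, k))
    return f"Context:\n{context}\n\nQuestion: {query}\nAnswer:"

docs = [
    "Paris is the capital of France",
    "PyTorch is a deep learning framework",
    "The transformer uses self-attention",
]
query = "what is the capital of france"
prompt = build_prompt(query, docs)
print(prompt)
```

The resulting prompt would then be passed to whichever LLM you are using, which generates an answer from the retrieved context instead of relying only on what it memorized during training.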

Here are some FREE resources you can use to learn the above.

👉Activeloop Fine-Tuning LLM Course

👉Activeloop Retrieval Augmented Generation for Production with LangChain & LlamaIndex

👉Transfer Learning for NLP with TensorFlow Hub

👉Transfer Learning from Large Language Models (LLMs)

Join the Community and Keep Up with the Research

Lastly, keep up to date with everything in the LLM space, either via the community or from research papers, preferably both. We can’t stop learning, so we need to upskill ourselves constantly. For example, here is my recommendation for the community:

👉HuggingFace Community Discord

Articles to Read

Here are some of my latest articles you might have missed this week.

How to Handle Time Zones and Timestamps Accurately with Pandas

How to Use the pivot_table Function for Advanced Data Summarization in Pandas

Personal Notes

It’s been flu season lately, and I have been afflicted this week. Whenever I get the flu, it takes some time to recover, so my writing here and on social media has been kind of slow lately.

Take care of yourselves!

Please leave a comment or join the chat if you want to discuss things with me.

Thank you for sharing Cornellius! One to bookmark for myself!

The training process for large models mainly includes the following stages:

1. Pretraining Stage

2. Tokenizer Training

3. Language Model Pretraining

4. Dataset Cleaning

5. Model Performance Evaluation

6. Instruction Tuning Stage

7. Open Source Dataset Organization

8. Model Evaluation Methods